AI in Healthcare: Building Trust for Better Outcomes

- Introduction to AI in Healthcare

- The Importance of Trust in Healthcare

- Ethical Principles Guiding AI in Healthcare

- Data Privacy and Security Measures

- Transparency and Explainability

- Patient Outcomes and AI Effectiveness

- Educating Stakeholders about AI in Healthcare

- Challenges in Building Trust in AI Healthcare Applications

- The Future of Trust in AI-Driven Healthcare

Introduction to AI in Healthcare

The integration of Artificial Intelligence (AI) into the healthcare sector has significantly transformed the landscape of patient care and medical practice. As healthcare systems around the globe embrace technology, AI applications have emerged as pivotal tools in enhancing diagnostic accuracy, treatment efficiency, and patient engagement. Currently, diverse AI technologies are being utilized within healthcare environments, providing substantial benefits to both practitioners and patients.

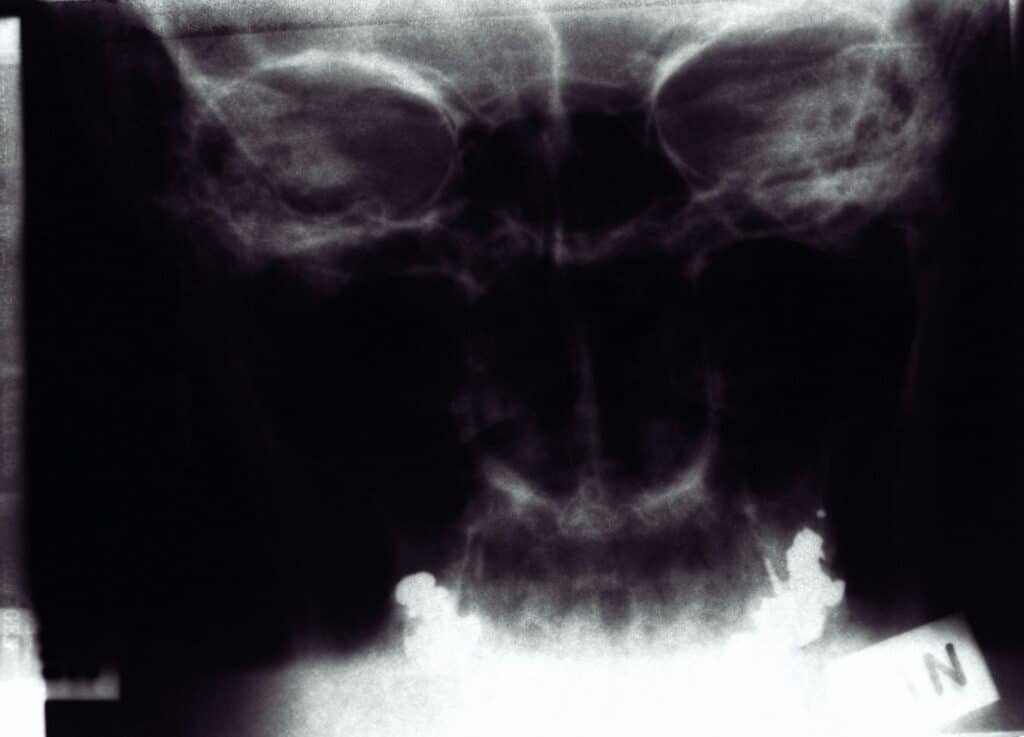

One prominent application of AI in healthcare is through diagnostic algorithms. These algorithms utilize machine learning to analyze medical images, lab results, and patient history, thereby assisting healthcare professionals in diagnosing diseases with greater precision. For instance, AI-driven tools are being employed to identify anomalies in radiology and pathology, leading to quicker and more reliable diagnoses.

Another significant area where AI is making an impact is virtual health assistants. These intelligent systems offer patients personalized guidance, medication reminders, and assistance with administrative tasks. By providing 24/7 support, virtual health assistants help patients manage their health more effectively while reducing the workload on healthcare providers. Such technologies not only improve patient access to care but also enhance the overall patient experience.

Furthermore, predictive analytics is becoming increasingly integral in patient care. By leveraging vast amounts of health data, AI applications can foresee potential health risk factors and identify trends that might otherwise go unnoticed. These predictive capabilities enable healthcare organizations to implement proactive measures, thus improving health outcomes and reducing costs.

In summary, the current landscape of AI in healthcare is marked by a range of applications that are redefining traditional medical practices. The importance of these technologies extends beyond operational efficiencies; they play a crucial role in building trust, enhancing patient experiences, and ultimately improving health outcomes. As we delve deeper into the topic, it is essential to explore how these AI applications are fostering human confidence in healthcare systems.

The Importance of Trust in Healthcare

Trust is a fundamental pillar of effective healthcare delivery and plays an essential role in fostering positive patient-provider relationships. In the healthcare environment, patients are often in vulnerable situations, requiring not only medical treatment but also emotional support. The presence of trust allows patients to communicate openly with their healthcare providers, facilitating accurate diagnoses and tailored treatments. When patients feel confident in their providers, they are more likely to share relevant information regarding their health, adhere to treatment plans, and engage in preventive care.

Research has shown that a strong sense of trust can significantly enhance patient outcomes. Trust reduces anxiety and promotes satisfaction with the care received, ultimately leading to improved health results. A prospective study demonstrated that patients who have high levels of trust in their healthcare providers experience greater adherence to medication regimens and are more likely to attend follow-up appointments. This adherence is linked to improved management of chronic diseases and greater overall patient well-being.

However, the absence of trust can have dire implications for healthcare outcomes. For instance, a study examining the impact of mistrust found that patients who do not trust their providers are less likely to seek care or follow medical advice, resulting in delays in treatment and poorer health outcomes. Additionally, in communities where mistrust in the healthcare system is prevalent, such as among marginalized groups, the consequences can be even more severe, leading to worsening health disparities and decreased access to care.

Real-life case studies further highlight the vital importance of trust in healthcare. For example, the Tuskegee syphilis study, conducted by the U.S. Public Health Service from 1932 to 1972, serves as a historical reminder of how a breach of trust can lead to widespread harm and lasting damage to a community’s relationship with healthcare providers. Trust is not merely a nicety; it is a necessity that shapes the quality of healthcare delivery and influences patient health trajectories.

Ethical Principles Guiding AI in Healthcare

As artificial intelligence (AI) technologies increasingly permeate the healthcare sector, the ethical principles that guide their development and implementation are paramount in ensuring public trust and acceptance. Four core principles of biomedical ethics, namely beneficence, non-maleficence, autonomy, and justice, serve as foundational pillars in shaping responsible and ethically sound AI practices.

Beneficence, characterized by actions that promote the well-being of patients, requires that AI applications in healthcare aim to enhance patient outcomes. This principle encourages the development of AI tools that support clinical decisions, improve diagnoses, and enable timely interventions, all in the service of better health results.

Non-maleficence, often articulated as the principle of “do no harm,” reinforces the ethical obligation of healthcare providers and AI developers to ensure that the implementation of AI does not adversely affect patients. Safeguarding against errors, bias, or misuse of AI technologies is vital, as these factors can lead to significant harm, undermining the very trust that healthcare relies upon. Ensuring the accuracy and reliability of AI algorithms is essential to uphold this principle.

Autonomy emphasizes the importance of respecting individual patient rights and choices. AI applications must be designed to facilitate informed consent, allowing patients to make knowledgeable decisions regarding their treatment options. It is imperative that AI does not diminish the core human elements of healthcare, such as empathy and shared decision-making, which foster patient autonomy.

Finally, the principle of justice addresses the equitable distribution of healthcare resources. AI tools must be developed and deployed with an awareness of social and economic disparities to avoid exacerbating existing inequalities. Ensuring that all patients, regardless of background, have equal access to the benefits of AI-driven healthcare is essential for promoting equitable health outcomes.
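One practical way to check whether an AI tool serves different patient groups equally well is to compare its error rates across those groups. The sketch below is a minimal illustration, assuming a binary screening model and made-up records; the group labels, the data, and the `sensitivity_by_group` helper are hypothetical and not drawn from any real system.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute the true positive rate per group from (group, actual, predicted) tuples."""
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for group, actual, predicted in records:
        if actual == 1:
            actual_pos[group] += 1
            if predicted == 1:
                true_pos[group] += 1
    # Only groups with at least one actual positive appear in the result.
    return {group: true_pos[group] / actual_pos[group] for group in actual_pos}


# Made-up data: (group label, actual condition, model prediction).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 1, 1), ("B", 0, 1),
]

rates = sensitivity_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A large gap means the model misses more true cases in one group than in another, which is a signal to investigate before deployment. Sensitivity is only one of several possible fairness measures, and the right choice depends on the clinical context.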

Data Privacy and Security Measures

In the realm of healthcare, protecting patient information is paramount, particularly as artificial intelligence (AI) applications become increasingly prevalent. These applications often utilize vast amounts of sensitive data, including medical records, demographic details, and even genetic information. Hence, the importance of implementing robust data privacy and security measures cannot be overstated for maintaining the trust of patients and healthcare providers alike.

Regulations such as the Health Insurance Portability and Accountability Act (HIPAA) establish strict guidelines for the handling of patient data. HIPAA requires covered healthcare entities to safeguard protected health information (PHI) through administrative, physical, and technical measures, such as access controls, encryption, and secure data storage. Compliance with such regulations is essential in fostering confidence among users of AI applications in healthcare, as it helps ensure that sensitive information is accessed only by authorized personnel.

Technological measures play a crucial role in safeguarding data privacy. For instance, encryption renders data unreadable to anyone who lacks the decryption key, while anonymization removes personally identifiable information from datasets used in AI training. These strategies not only protect individual privacy but also enhance the credibility of AI solutions in healthcare. By demonstrating a commitment to these privacy measures, healthcare providers can reassure patients regarding the security of their data.
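To make the idea of stripping identifiers before AI training more concrete, here is a minimal sketch using only the Python standard library. It drops direct identifiers and replaces the patient ID with a keyed hash (HMAC-SHA256). Strictly speaking this is pseudonymization rather than full anonymization, since whoever holds the key can still link records. The field names, the `pseudonymize` helper, and the demo key are all assumptions made for illustration.

```python
import hashlib
import hmac

# Hypothetical field names; a real schema and identifier list will differ.
DIRECT_IDENTIFIERS = {"name", "address", "phone", "email"}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Return a copy of the record without direct identifiers and with a keyed-hash ID."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    # The same patient always maps to the same token, but the token is hard
    # to reverse without the secret key.
    token = hmac.new(secret_key, str(record["patient_id"]).encode("utf-8"), hashlib.sha256)
    cleaned["patient_id"] = token.hexdigest()
    return cleaned


record = {
    "patient_id": "12345",
    "name": "Jane Doe",
    "phone": "555-0100",
    "age": 54,
    "diagnosis_code": "E11.9",
}
key = b"demo-key-do-not-use-in-production"  # illustrative only
safe_record = pseudonymize(record, key)
print(safe_record)
```

Remaining fields such as age or diagnosis can still help re-identify someone when combined with other data, so a step like this is a starting point rather than a complete de-identification process.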

Despite these measures, data breaches have occurred, undermining confidence in healthcare systems. Notable examples include breaches at major health insurers and hospitals that exposed millions of patient records. The repercussions of such incidents are significant, leading to financial losses, regulatory penalties, and a crisis of trust. These incidents underscore the urgent need for continuous improvement in data security practices as AI technologies evolve in the healthcare sector.

Transparency and Explainability

In the rapidly evolving domain of healthcare, the integration of artificial intelligence (AI) applications has underscored the paramount importance of transparency and explainability. For AI systems to effectively function in providing patient care, it is essential that both healthcare providers and patients possess a clear understanding of how these systems operate. Transparency refers to the clarity with which AI processes and outcomes are communicated, while explainability is the degree to which the internal workings and decision-making criteria of AI models can be understood by humans. These two attributes are pivotal in fostering trust, acceptance, and confidence in AI’s role in healthcare.

AI systems often operate using complex algorithms that analyze vast amounts of data to produce their outputs. As such, making these outputs interpretable for healthcare stakeholders calls for specific approaches. One effective method involves the use of visualizations that elucidate the variables influencing AI decisions. For instance, presenting predictive models through intuitive graphical interfaces helps medical professionals comprehend the reasoning behind AI-generated recommendations. Additionally, the employment of model-agnostic frameworks, such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), has gained traction. These techniques can help dissect intricate models, offering insight into how different input factors contribute to a particular prediction.
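The notion of input factors contributing to a prediction can be illustrated without any special library. For a linear model whose features are treated as independent, the SHAP value of each feature reduces to its weight multiplied by how far the patient’s value sits from a baseline average. The sketch below applies that idea to a hypothetical risk score. The weights, feature names, and baseline values are invented for illustration, and the code does not call the `shap` or `lime` packages.

```python
# Hypothetical linear risk model: the weights, feature names, and baseline
# values below are illustrative only, not taken from any real clinical model.
WEIGHTS = {"age": 0.03, "systolic_bp": 0.02, "hba1c": 0.40}
INTERCEPT = -5.0
BASELINE = {"age": 50.0, "systolic_bp": 120.0, "hba1c": 5.5}  # assumed cohort means


def score(features: dict) -> float:
    """Raw model output for one patient."""
    return INTERCEPT + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)


def contributions(features: dict) -> dict:
    """Per-feature contribution relative to the baseline patient."""
    return {name: WEIGHTS[name] * (features[name] - BASELINE[name]) for name in WEIGHTS}


patient = {"age": 70.0, "systolic_bp": 150.0, "hba1c": 8.0}
explanation = contributions(patient)

# List the features from most to least influential for this patient.
for name, value in sorted(explanation.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:12s} {value:+.2f}")
```

The contributions add up to the difference between this patient’s score and the baseline score, which is the additive property that makes such explanations easy to present as a bar chart. Non-linear models need the sampling or tree-based methods that dedicated explanation tools provide.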

Moreover, clear communication regarding the limitations and potential biases inherent in AI systems serves to empower healthcare stakeholders, equipping them to make informed decisions. By fostering a culture of openness and active engagement regarding AI technologies, healthcare professionals can alleviate concerns and encourage patients to embrace AI-assisted care. In this context, understanding AI’s functionalities is not merely an academic exercise but a vital prerequisite for enriching patient experience and enhancing health outcomes.

Patient Outcomes and AI Effectiveness

Artificial Intelligence (AI) has emerged as a powerful tool for enhancing patient outcomes and, in turn, building trust among patients and healthcare providers. Several studies indicate that AI applications can help reduce mortality rates, improve treatment accuracy, and raise overall patient satisfaction.

For instance, a 2020 study published in the Journal of Medical Internet Research demonstrated that AI-assisted diagnostic tools have decreased misdiagnosis rates by approximately 30%, particularly in high-stakes areas like oncology. When AI models analyze vast datasets, including medical histories and imaging reports, they provide clinicians with insights that often surpass human capability in terms of speed and accuracy. This enhanced decision-making process contributes to timely and effective treatments, which ultimately leads to better patient outcomes.

Furthermore, AI’s ability to monitor patient conditions in real-time has proven critical in managing chronic diseases. For example, wearable devices powered by AI algorithms track vital signs and alert healthcare providers to any anomalies, leading to proactive interventions. According to a study from Harvard University, patients utilizing AI-driven health monitoring systems reported a 25% reduction in emergency room visits, signifying both improved health management and increased trust in AI applications.
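As a rough illustration of how a monitoring system might flag anomalies in vital signs, the sketch below compares each new reading with the mean and standard deviation of the readings just before it. This is a simple rolling z-score check, not the method used by any particular device; the window size, the threshold, the sample heart-rate values, and the `find_anomalies` helper are assumptions.

```python
from statistics import mean, stdev


def find_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate sharply from the preceding window."""
    alerts = []
    for i in range(window, len(readings)):
        recent = readings[i - window:i]
        mu = mean(recent)
        sigma = stdev(recent)
        # Skip flat windows to avoid dividing by zero.
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            alerts.append(i)
    return alerts


# Made-up heart-rate samples in beats per minute, with one sudden spike.
heart_rate = [72, 74, 71, 73, 75, 74, 72, 118, 73, 74]
alert_indices = find_anomalies(heart_rate)
print(alert_indices)
```

One limitation worth noting: a spike inflates the standard deviation of the windows that follow it, so a second anomaly arriving shortly afterwards could be missed. Production systems typically use more robust statistics and clinically validated thresholds.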

Testimonials from healthcare professionals reinforce these findings. Dr. Sarah Thompson, an oncologist, reported, “AI tools have transformed my practice. The accuracy of treatment recommendations has significantly increased, and my patients express greater trust in the care they receive.” Similarly, patient feedback indicates that those treated with AI-supported systems feel more hopeful about their health prospects, cultivating a stronger trust in both their healthcare providers and the technologies employed.

In essence, as data underscores the positive impact of AI on patient outcomes, it becomes increasingly apparent that these advancements not only enhance the effectiveness of healthcare interventions but also strengthen the trust essential for successful patient-provider relationships.

Educating Stakeholders about AI in Healthcare

The integration of artificial intelligence (AI) into the healthcare sector represents a significant advancement, but it necessitates a well-informed audience to harness its full potential effectively. Educating healthcare professionals, patients, and the general public about AI technologies is crucial in fostering understanding and building trust. Healthcare professionals require comprehensive training programs that not only cover the technical aspects of AI applications but also clarify their operational implications and ethical considerations. This can be achieved through tailored workshops and continuous education courses that emphasize hands-on experience with AI tools, ensuring that practitioners are well-equipped to utilize these technologies in their daily operations.

For patients, the dissemination of information through easily accessible online resources can demystify AI applications in healthcare. Creating user-friendly guides, webinars, and informative videos can empower patients to navigate their health data and understand the role AI can play in personalized medicine, diagnostics, and treatment options. Additionally, establishing community outreach programs helps to create dialogues between healthcare providers and the public. Such initiatives can include public forums and seminars that invite discussions and answers to questions regarding AI’s impact on patient care and outcomes.

Moreover, it is vital to utilize social media platforms to share real-time information and updates about AI developments in healthcare. By collaborating with influencers or respected medical professionals, awareness campaigns can reach broader audiences, breaking down complex AI concepts into digestible information for all stakeholders. This multifaceted approach to education encourages a transparent environment, enhancing the relationship between AI technology and healthcare stakeholders. Ultimately, as understanding and confidence grow, so too will the acceptance of AI solutions in enhancing healthcare delivery.

Challenges in Building Trust in AI Healthcare Applications

As healthcare continues to evolve through technological advancements, the integration of artificial intelligence (AI) applications raises several challenges regarding trust. One significant barrier is the fear of job displacement among healthcare professionals. Many individuals worry that reliance on AI could lead to a diminished role for human practitioners, creating resistance to adoption. This apprehension is compounded by concerns about the effectiveness of AI in aiding diagnoses or treatment decisions. Trust in AI systems is, therefore, intertwined with the assurance that these tools will enhance rather than replace human expertise.

Another challenge that healthcare organizations face is skepticism regarding the capabilities of AI technologies. The perception that AI lacks the nuanced understanding required for patient care can lead to hesitance in its implementation. Clinicians often question whether AI can genuinely interpret medical data with the same accuracy as seasoned professionals. This skepticism is exacerbated by occasional high-profile failures of AI systems, which can undermine confidence in their application across various healthcare settings.

Furthermore, the digital divide presents a formidable challenge in building trust. Not all healthcare providers have equal access to advanced technologies or the necessary resources to implement AI applications effectively. This disparity can create a system where only certain populations benefit from AI advancements, leading to inequities in care. Addressing this digital divide requires targeted efforts from stakeholders to ensure that all healthcare providers have the necessary infrastructure and training to leverage AI effectively.

To overcome these challenges, it is essential to foster collaboration between AI developers and healthcare professionals. Developing policies that emphasize transparency, accountability, and robust data security can help in building trust among stakeholders. Additionally, continuous education and training programs should aim to mitigate fears surrounding job displacement and empower healthcare professionals to embrace AI as a collaborative tool, thereby enhancing both confidence and capability in AI healthcare applications.

The Future of Trust in AI-Driven Healthcare

The integration of artificial intelligence (AI) into healthcare is at a critical juncture where establishing trust is paramount for its success. As technology continues to evolve, the future of AI in healthcare will depend substantially on the stakeholders’ collective effort to foster a trustworthy environment. Trends emerging in this space suggest that patient-centric AI solutions will likely dominate the landscape, further enhancing the rapport between healthcare providers and patients.

One notable innovation on the horizon is the development of AI systems tailored to individual patient needs, which incorporate not just data analysis but also patient preferences and experiences. As these systems become more intuitive and personalized, they are intended to capture patient requirements more fully. This shift towards patient-centric AI can significantly enhance trust, as patients will likely feel more involved in their care decisions.

Moreover, ethical frameworks and regulatory measures will play an essential role in shaping the landscape of AI in healthcare. With increasing scrutiny on data usage, transparency, and accountability, compliance with established guidelines will become crucial for the deployment of AI technologies. The involvement of diverse stakeholders, including ethicists, regulators, and healthcare professionals, will ensure that trust remains at the forefront of AI development.

As we move forward, it is vital to recognize that trust in AI-driven healthcare will be built not only on technological advancements but also on a commitment to ethical standards and patient engagement. By fostering open communication and demonstrating the reliability of AI applications, the healthcare sector can create a robust framework where both patients and providers can thrive, ensuring that trust is not only earned but is also sustained.