How to Use DeepSeek-V3 for Enhanced Data Search

Introduction to DeepSeek-V3

DeepSeek-V3 applies artificial intelligence to data search, with the goal of making retrieval faster and more accurate across a range of applications. As the volume of digital information keeps growing, efficient and effective search has become a necessity rather than a convenience. DeepSeek-V3 addresses this challenge by pairing AI-based retrieval algorithms with a user-centric design that makes data quick and accurate to access.

The platform is designed to handle a wide range of data types and sources, so that an organization’s databases can be searched comprehensively. Its AI-driven semantic search goes beyond traditional keyword matching: users can find relevant information through context and meaning, not only through exact matches. This matters most where raw data is voluminous and disorganized, which is exactly where simple search tools struggle.

DeepSeek-V3 also uses machine learning to refine search results over time, adapting to user behavior and preferences. This adaptability is intended to make results steadily more relevant as the platform is used. Such capabilities matter for corporations managing extensive data sets, but also for smaller teams and individuals who need to find information quickly.
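Learning from user behavior can take many forms, and the text does not say which one DeepSeek-V3 uses. As a minimal illustration, the sketch below assumes a simple scheme: each click a user makes on a document adds a small bonus to that document's base relevance score for that user. The class `FeedbackReranker` and its `weight` parameter are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


class FeedbackReranker:
    """Blend a base relevance score with a per-user click bonus (illustrative scheme)."""

    def __init__(self, weight: float = 0.1) -> None:
        self.weight = weight  # bonus added per recorded click (hypothetical tuning knob)
        # clicks[user][doc_id] -> number of times this user opened this document
        self.clicks: dict[str, dict[str, int]] = defaultdict(lambda: defaultdict(int))

    def record_click(self, user: str, doc_id: str) -> None:
        self.clicks[user][doc_id] += 1

    def rerank(self, user: str, results: list[tuple[str, float]]) -> list[tuple[str, float]]:
        """Re-sort (doc_id, base_score) pairs by base score plus the user's click bonus."""
        user_clicks = self.clicks.get(user, {})  # .get avoids creating entries for new users
        adjusted = [
            (doc_id, score + self.weight * user_clicks.get(doc_id, 0))
            for doc_id, score in results
        ]
        return sorted(adjusted, key=lambda pair: pair[1], reverse=True)


reranker = FeedbackReranker(weight=0.1)
reranker.record_click("alice", "doc-b")
reranker.record_click("alice", "doc-b")
print(reranker.rerank("alice", [("doc-a", 0.80), ("doc-b", 0.75)]))
```

Two clicks lift `doc-b` above `doc-a` for Alice only; other users keep the original order. A production system would typically also let old clicks decay so that early behavior does not dominate forever.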

Efficient data retrieval is more than a convenience: it underpins informed decision-making, innovation, and competitive advantage. By changing how users interact with and access information, DeepSeek-V3 positions itself as a key tool for optimizing data search.

Key Features of DeepSeek-V3

DeepSeek-V3’s search is driven by artificial intelligence (AI) algorithms designed to make data retrieval more efficient and accurate. The most prominent of these use machine learning: they categorize data and also learn from user interactions, continuously refining their search capabilities. For instance, users may notice that results become more relevant over time as the system adapts to their particular search patterns and preferences.

Another notable feature of DeepSeek-V3 is its advanced filtering. Users can combine multiple filters to narrow large volumes of data quickly, which yields precise results whether they are searching academic databases, corporate records, or other large datasets. For example, a researcher can filter results by date, relevance, or source type, so that the retrieved information is both accurate and applicable to their specific needs.
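Combining filters like these amounts to keeping only the results that pass every active condition. The sketch below is a generic illustration in plain Python, not DeepSeek-V3's API; the `Result` fields and the `apply_filters` function are hypothetical names chosen for the example.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Iterable, Optional


@dataclass
class Result:
    title: str
    published: date
    source_type: str  # e.g. "journal", "report", "crm"
    relevance: float  # 0.0-1.0 score from the search step (assumed scale)


def apply_filters(
    results: Iterable[Result],
    start: Optional[date] = None,
    end: Optional[date] = None,
    source_types: Optional[set[str]] = None,
    min_relevance: float = 0.0,
) -> list[Result]:
    """Keep results that pass every active filter, most relevant first."""
    kept = [
        r
        for r in results
        if (start is None or r.published >= start)
        and (end is None or r.published <= end)
        and (source_types is None or r.source_type in source_types)
        and r.relevance >= min_relevance
    ]
    return sorted(kept, key=lambda r: r.relevance, reverse=True)
```

Filters left at `None` are simply inactive, so the same function serves a quick unfiltered search and a narrowly scoped one.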

The user-friendly interface of DeepSeek-V3 contributes significantly to the overall user experience. With a clean design and intuitive navigation, users can start searching without a steep learning curve. The interface guides users through the available features, so less time is spent learning how to use the system. This streamlined approach makes data search more focused and productive.

Finally, DeepSeek-V3 boasts robust integration with other data systems, which makes it versatile across applications. Organizations can connect it to existing databases, content management systems, and other data sources to create a unified search environment. For example, a business can integrate its customer relationship management (CRM) data with DeepSeek-V3, allowing employees to run holistic searches that span several data sets. Together, these features make DeepSeek-V3 a powerful tool for navigating today’s complex data landscape.
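Searching across connected systems usually means querying each source and merging the hits, a pattern often called federated search. The sketch below shows that pattern with two in-memory stand-ins for a CRM and a wiki; `SearchSource`, `InMemorySource`, and `federated_search` are hypothetical names, not part of any DeepSeek-V3 interface.

```python
from __future__ import annotations

from typing import Protocol


class SearchSource(Protocol):
    """Anything that answers a text query with (doc_id, score) pairs."""

    name: str

    def search(self, query: str) -> list[tuple[str, float]]: ...


class InMemorySource:
    """Toy source: scores a document by the fraction of query words it contains."""

    def __init__(self, name: str, docs: dict[str, str]) -> None:
        self.name = name
        self.docs = docs

    def search(self, query: str) -> list[tuple[str, float]]:
        terms = query.lower().split()
        if not terms:
            return []
        hits = []
        for doc_id, text in self.docs.items():
            words = set(text.lower().split())
            score = sum(term in words for term in terms) / len(terms)
            if score > 0:
                hits.append((doc_id, score))
        return hits


def federated_search(query: str, sources: list[SearchSource], top_k: int = 5) -> list[tuple[str, str, float]]:
    """Query every source, tag each hit with its origin, and merge by score."""
    merged = [
        (source.name, doc_id, score)
        for source in sources
        for doc_id, score in source.search(query)
    ]
    merged.sort(key=lambda hit: hit[2], reverse=True)
    return merged[:top_k]


crm = InMemorySource("crm", {"c1": "Acme renewal contract", "c2": "Globex support ticket"})
wiki = InMemorySource("wiki", {"w1": "How to renew an Acme contract", "w2": "Holiday policy"})
print(federated_search("acme contract", [crm, wiki]))
```

Merging raw scores only works when every source scores on a comparable scale. Real backends rarely do, so rank-based merging such as reciprocal rank fusion is a common alternative.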

How DeepSeek-V3 Works

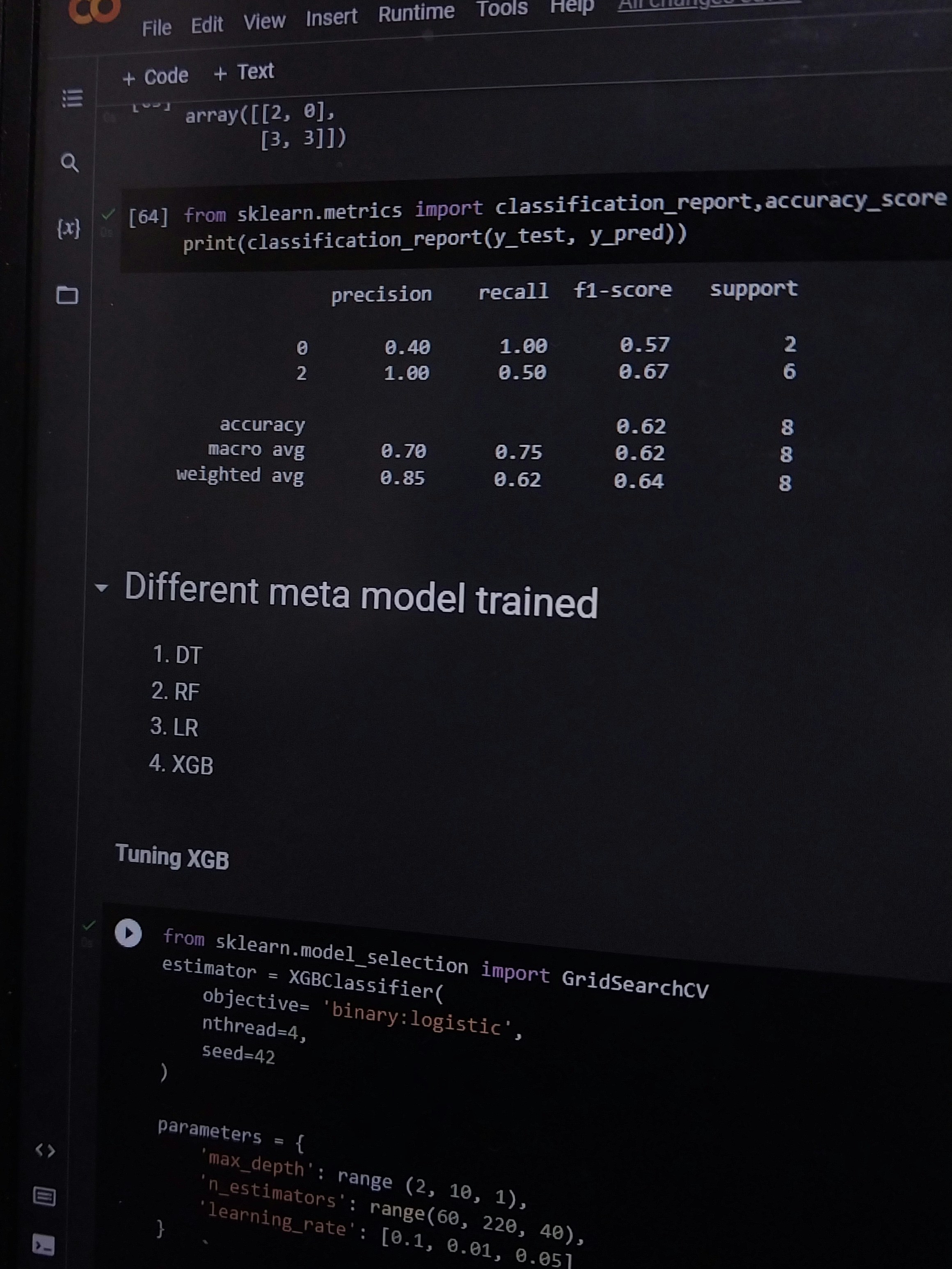

DeepSeek-V3 employs an architecture that integrates AI and machine learning algorithms to optimize data search. At its core, the system consists of interconnected modules responsible for data ingestion, processing, and retrieval. This modular design makes the system scalable and adaptable, suitable for deployments ranging from enterprise-level databases to smaller-scale projects.

DeepSeek-V3 processes data in several steps. First, data from various sources is ingested through an extraction layer that supports both structured and unstructured formats, which is essential given how varied today’s data is. The collected data then goes through a cleaning and preprocessing phase in which irrelevant or duplicate information is removed, helping ensure that users receive accurate and relevant results.
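The ingestion and cleaning steps can be approximated as follows: structured records and free text are flattened into a common string form, whitespace and case are normalized, and empty or exactly duplicated entries are dropped. This is an illustrative sketch under those assumptions, not DeepSeek-V3's actual preprocessing; the function names are hypothetical.

```python
from __future__ import annotations

import hashlib
import re
from typing import Iterable, Union


def to_text(item: Union[str, dict]) -> str:
    """Flatten a structured record (dict) or raw text into one searchable string."""
    if isinstance(item, dict):
        return " ".join(f"{key}: {value}" for key, value in item.items())
    return item


def normalize(text: str) -> str:
    """Collapse runs of whitespace, trim, and lowercase."""
    return re.sub(r"\s+", " ", text).strip().lower()


def ingest(items: Iterable[Union[str, dict]]) -> list[str]:
    """Return cleaned documents with empty entries and exact duplicates removed."""
    seen: set[str] = set()
    cleaned: list[str] = []
    for item in items:
        text = normalize(to_text(item))
        if not text:
            continue  # drop empty records
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # drop exact duplicates (compared after normalization)
        seen.add(digest)
        cleaned.append(text)
    return cleaned
```

Hashing the normalized text catches exact duplicates only; near-duplicates (for example, the same report with a different footer) need fuzzy techniques such as MinHash. Lowercasing everything is also a simplification that a real pipeline might apply only to the search index, not to the stored copy.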

Central to DeepSeek-V3’s functionality is its AI-driven search engine. Rather than relying solely on keyword matching, it analyzes content and retrieves information using semantic understanding. This lets it interpret user queries more effectively and return results that are contextually relevant and aligned with user intent. Machine learning strengthens this capability further: the system learns from user interactions and feedback, progressively refining its search accuracy over time.
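Semantic retrieval is commonly implemented by mapping queries and documents to vectors (embeddings) and ranking documents by cosine similarity. The sketch below assumes that approach; the three-dimensional vectors are invented for illustration, whereas a real system would obtain them from an embedding model. Nothing here is taken from DeepSeek-V3's internals.

```python
from __future__ import annotations

import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 for vectors pointing the same way, 0.0 for orthogonal ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_search(query_vec: list[float], doc_vecs: dict[str, list[float]], top_k: int = 3) -> list[tuple[str, float]]:
    """Rank documents by cosine similarity between their vectors and the query vector."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Made-up 3-dimensional vectors; the axes loosely stand for (vehicles, money, health).
# A real system would compute these with an embedding model.
DOC_VECS = {
    "automobile insurance claims": [0.9, 0.6, 0.0],
    "quarterly revenue report": [0.0, 1.0, 0.1],
    "patient intake form": [0.0, 0.1, 1.0],
}
QUERY_VEC = [1.0, 0.3, 0.0]  # stands in for an embedding of "car accident costs"

for doc, score in semantic_search(QUERY_VEC, DOC_VECS):
    print(f"{score:.2f}  {doc}")
```

The query "car accident costs" shares no words with "automobile insurance claims", yet that document ranks first because its vector points in a similar direction. Pure keyword matching would miss it.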

The flow of data through DeepSeek-V3 can be summarized in a simplified flowchart that traces the sequential steps from data ingestion to final retrieval and shows how the system components interact. By integrating AI and machine learning in this way, DeepSeek-V3 offers a new approach to accessing information in a world increasingly inundated with data.

Comparative Analysis: DeepSeek-V3 vs. Traditional Search Tools

The advent of DeepSeek-V3 marks a significant shift in the landscape of data search technologies, particularly when juxtaposed against traditional search tools. Traditional search engines often rely on keyword matching and basic algorithms to retrieve information, which can sometimes lead to irrelevant results or a lack of depth in the information retrieved. In contrast, DeepSeek-V3 leverages advanced artificial intelligence to provide rapid, accurate, and contextually relevant search results.
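For comparison, keyword search in traditional tools is often built on an inverted index that maps each word to the documents containing it. A minimal version with boolean AND matching looks like this; the function names are illustrative.

```python
from __future__ import annotations

import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase and split on anything that is not a letter or digit."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each token to the set of document IDs that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def keyword_search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return IDs of documents containing every query token (boolean AND)."""
    tokens = tokenize(query)
    if not tokens:
        return set()
    result = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        result &= index.get(token, set())
    return result


docs = {"d1": "Automobile insurance claims", "d2": "Car insurance renewal", "d3": "Car wash"}
print(keyword_search(build_index(docs), "car insurance"))
```

The query "car insurance" finds `d2` but not `d1` ("Automobile insurance claims"), even though both concern the same topic. This exact-match limitation is what semantic search aims to address.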

One of the primary advantages of DeepSeek-V3 is its processing speed. Traditional search tools may take considerable time to sift through large databases. Reported figures suggest that DeepSeek-V3 can answer queries in milliseconds, a considerable improvement over conventional methods, although real latency depends on data volume, infrastructure, and deployment. This speed is crucial for businesses and researchers who depend on timely access to data for decision-making.
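Because latency depends so heavily on the environment, reported speeds are best checked against your own data. The harness below times any search function passed to it; `benchmark` is a hypothetical helper, and its 95th percentile uses the simple nearest-rank method.

```python
from __future__ import annotations

import math
import statistics
import time
from typing import Callable, Sequence


def benchmark(search: Callable[[str], object], queries: Sequence[str], repeats: int = 5) -> dict[str, float]:
    """Time each query `repeats` times; return median, p95, and max latency in milliseconds."""
    if not queries or repeats < 1:
        raise ValueError("need at least one query and one repeat")
    samples = []
    for query in queries:
        for _ in range(repeats):
            start = time.perf_counter()
            search(query)
            samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)  # nearest-rank percentile
    return {
        "median_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


# Stand-in search function; replace it with a call to the system under test.
print(benchmark(lambda q: q.lower().split(), ["Quarterly revenue", "Acme contract"]))
```

Reporting the 95th percentile alongside the median matters because a search tool that is fast on average can still feel slow if a noticeable share of queries take much longer.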

Accuracy is another area where DeepSeek-V3 excels. Traditional search tools typically rely on rigid matching rules that can misinterpret user intent, whereas DeepSeek-V3 uses sophisticated natural language processing to capture the nuances of a query. As a result, users receive information that better fits their specific needs. Case studies have reported a 40% improvement in search-result relevance for organizations using DeepSeek-V3 compared with traditional tools, though figures like this depend heavily on the baseline and on how relevance is measured.
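A relevance figure is only meaningful alongside a definition of relevance. One standard metric is precision@k: the share of the top k results that human assessors judged relevant. The sketch below computes it for two systems over the same labelled queries; the data layout (query ID mapped to a ranked list or a set of relevant IDs) is an assumption chosen for the example.

```python
from __future__ import annotations

from typing import Mapping, Sequence


def precision_at_k(ranked: Sequence[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k results judged relevant (missing slots count as misses)."""
    if k <= 0:
        raise ValueError("k must be positive")
    return sum(doc in relevant for doc in ranked[:k]) / k


def mean_precision_at_k(
    runs: Mapping[str, Sequence[str]],
    judgments: Mapping[str, set[str]],
    k: int,
) -> float:
    """Average precision@k over every judged query; a query with no run scores 0."""
    if not judgments:
        raise ValueError("no judged queries")
    scores = [precision_at_k(runs.get(q, []), rel, k) for q, rel in judgments.items()]
    return sum(scores) / len(scores)


judgments = {"q1": {"a", "b"}, "q2": {"x"}}  # human relevance labels
baseline = {"q1": ["a", "c", "d"], "q2": ["y", "z", "x"]}
candidate = {"q1": ["a", "b", "c"], "q2": ["x", "y", "z"]}

old = mean_precision_at_k(baseline, judgments, k=3)
new = mean_precision_at_k(candidate, judgments, k=3)
print(f"baseline {old:.2f}, candidate {new:.2f}, relative change {(new - old) / old:+.0%}")
```

In this toy example the candidate scores 0.50 against the baseline's 0.33, a 50% relative improvement. Two queries say little, though; a credible comparison needs a larger, representative query set.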

DeepSeek-V3 also includes intelligent recommendation features that suggest relevant data based on user behavior and preferences. Many traditional search tools lack this capability, which leaves users repeating similar searches. With DeepSeek-V3, personalized suggestions support discovery and make searching more productive.
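A simple way to produce behavior-based suggestions is item co-occurrence: documents often opened in the same session are recommended together. The sketch assumes session logs are available as lists of document IDs and illustrates the general idea, not DeepSeek-V3's recommendation method; both function names are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from itertools import permutations
from typing import Iterable


def build_cooccurrence(sessions: Iterable[Iterable[str]]) -> dict[str, Counter]:
    """Count, for every document, how often each other document shared a session with it."""
    co: dict[str, Counter] = defaultdict(Counter)
    for session in sessions:
        for a, b in permutations(set(session), 2):  # ordered pairs of distinct documents
            co[a][b] += 1
    return co


def recommend(co: dict[str, Counter], doc_id: str, top_n: int = 3) -> list[str]:
    """Documents most often opened alongside `doc_id`, most frequent first."""
    return [other for other, _ in co.get(doc_id, Counter()).most_common(top_n)]


co = build_cooccurrence([["a", "b", "c"], ["a", "b"], ["a", "d"]])
print(recommend(co, "a", top_n=1))
```

Converting each session to a set means repeated views within one session count once, which keeps a single heavy session from dominating the counts.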

In conclusion, the comparative analysis reveals that DeepSeek-V3 not only surpasses traditional search tools in speed and accuracy but also introduces intelligent recommendations that cater to user preferences. This transformative technology aligns with the evolving demands of data retrieval, ultimately paving the way for a more efficient and satisfying user experience.

Use Cases of DeepSeek-V3

DeepSeek-V3 has emerged as a transformative tool across various industries, providing innovative solutions to challenges related to data search and handling. One notable application is in the healthcare sector, where managing vast amounts of patient data, clinical research, and treatment plans can be overwhelming. DeepSeek-V3 enables healthcare professionals to quickly access and analyze patient histories and medical records, ultimately improving patient care and allowing for more informed decision-making. By utilizing natural language processing and machine learning algorithms, healthcare providers can retrieve relevant information more efficiently, thereby enhancing overall operational efficiency.

Another industry poised to benefit from DeepSeek-V3’s capabilities is finance. Financial institutions deal with enormous volumes of data, including transaction records, regulatory requirements, and market trends. DeepSeek-V3 gives financial analysts and auditors tools to search across disparate data sources, identify irregularities, and check compliance. Faster retrieval of key data can be pivotal when making timely investment decisions or managing risk, and the system’s ability to understand context supports more comprehensive analytics for strategic planning.
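Flagging irregularities can start with a basic statistical check. The sketch below marks transactions whose amount lies more than `threshold` standard deviations from the mean (a z-score test). This technique is chosen here for illustration and is not described as DeepSeek-V3's method; `flag_irregular` is a hypothetical name.

```python
from __future__ import annotations

import statistics


def flag_irregular(amounts: dict[str, float], threshold: float = 3.0) -> list[str]:
    """Return IDs of transactions more than `threshold` sample standard deviations from the mean."""
    values = list(amounts.values())
    if len(values) < 2:
        return []  # a standard deviation needs at least two values
    mean = statistics.mean(values)
    stdev = statistics.stdev(values)
    if stdev == 0:
        return []  # all amounts identical: nothing stands out
    return [tx for tx, amount in amounts.items() if abs(amount - mean) / stdev > threshold]


amounts = {f"t{i}": 100.0 + (i % 3) for i in range(20)}
amounts["big"] = 10000.0
print(flag_irregular(amounts))
```

A single large outlier inflates the standard deviation, so small samples can hide it: with n values, no sample z-score can exceed (n - 1) / sqrt(n), which is below 3 for n of 10 or fewer. Median-based alternatives are often preferred for skewed financial data.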

In the realm of research, scholars often face the challenge of sifting through extensive academic articles and data sets. DeepSeek-V3 addresses this issue by simplifying the data discovery process. Researchers can utilize the platform to perform targeted searches across multiple databases, thus streamlining their workflow. Whether it is for literature reviews or data analysis, DeepSeek-V3 empowers researchers by providing them with relevant information efficiently, allowing them to focus on generating insights rather than spending hours searching for necessary data.

These use cases illustrate how DeepSeek-V3 is revolutionizing data search capabilities within healthcare, finance, and research sectors, significantly improving data handling and operational efficiencies.

User Experience and Interface

The user experience (UX) of DeepSeek-V3 plays a pivotal role in its acceptance and effectiveness as a data search tool. The interface is designed to be intuitive, catering to a wide range of users—from seasoned data analysts to those less familiar with advanced search techniques. Feedback from users has indicated that the layout is clean and uncluttered, prioritizing essential features while minimizing distractions. This design philosophy aids in reducing cognitive load, enabling users to focus on the task at hand.

Navigation within DeepSeek-V3 has been streamlined to facilitate quick and efficient searches. The search bar is prominently located, allowing users to initiate queries with ease. Furthermore, well-organized categories enable users to drill down into specific types of data without unnecessary clicks. This hierarchical setup not only enhances usability but also fosters an environment where users can discover new data types relevant to their research seamlessly. Additionally, the incorporation of a visually appealing color palette contributes to a pleasant browsing experience, leading to increased user satisfaction.

The learning curve for new users is another critical aspect of the DeepSeek-V3 interface. Tutorials and tooltips are incorporated throughout the platform, ensuring that essential functions are easily understood and utilized. Feedback gathered from early adopters reflects a positive reception to these resources, indicating that they significantly reduce the time required to become proficient. Even users without technical expertise have reported feeling capable of conducting thorough searches after only a few interactions with the platform.

Through continuous updates based on user feedback, DeepSeek-V3’s interface evolves, maintaining its relevance in an ever-changing data landscape. By prioritizing user needs, the platform not only simplifies data search processes but also maximizes the overall effectiveness of data utilization.

Advantages of Using DeepSeek-V3

DeepSeek-V3 marks a significant step forward in how organizations approach data search and management. One of its primary advantages is efficiency: organizations can process large amounts of data in a fraction of the time it would traditionally take, which raises productivity. Studies have reported that businesses using DeepSeek-V3 cut search-related time by at least 30%, freeing staff to focus on core activities, although results will vary with the data and workflows involved.

Another crucial benefit is cost-effectiveness. By streamlining the search process, organizations can reduce spending on data retrieval and processing. Reported reductions in operational costs reach up to 20%, because the AI lessens the need for human involvement in repetitive search tasks. Companies can then direct those resources toward strategic initiatives rather than labor-intensive data management.
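To see what percentage claims like these would mean for a particular team, the arithmetic is straightforward. The helper below is hypothetical; the 48 working weeks per year and the example inputs are assumptions to replace with your own figures.

```python
def estimate_savings(hours_per_week: float, hourly_cost: float, time_reduction: float, weeks_per_year: int = 48) -> dict:
    """Annual hours and cost saved if search time drops by `time_reduction` (0.30 means 30%)."""
    if not 0.0 <= time_reduction <= 1.0:
        raise ValueError("time_reduction must be between 0 and 1")
    hours_saved = hours_per_week * time_reduction * weeks_per_year
    return {"hours_saved": hours_saved, "cost_saved": hours_saved * hourly_cost}


print(estimate_savings(hours_per_week=10, hourly_cost=60, time_reduction=0.30))
```

For example, a team spending 10 hours a week on search at a loaded cost of $60 per hour would, at a 30% reduction, save 144 hours and $8,640 per year.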

DeepSeek-V3 can also improve data accuracy. Its algorithms are designed to raise the relevance of search results, reducing the chance of errors and helping users retrieve the most pertinent information. Organizations that integrate DeepSeek-V3 have reported a 25% increase in data accuracy. Such an improvement aids immediate decision-making and supports long-term strategic planning built on reliable data.

DeepSeek-V3 also supports better data management by making storage and retrieval more organized, so that employees can reach crucial information quickly. Effective data management has been linked to improved organizational performance, and organizations using DeepSeek-V3 may gain an advantage over competitors that rely on conventional methods.

Potential Challenges and Limitations

While DeepSeek-V3 offers significant advancements in data search capabilities through its AI-driven approaches, there are inherent challenges and limitations that users may encounter. One of the primary concerns is the dependency on the underlying quality of data. DeepSeek-V3’s effectiveness is contingent on access to accurate, relevant, and well-structured datasets. If the data input is flawed or not representative, the output results will likely reflect those shortcomings, rendering the system less effective in conveying critical insights.
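Because output quality tracks input quality, it helps to audit records before indexing them. The sketch below counts a few common problems; the required fields, the `min_chars` threshold, and the `quality_report` function are hypothetical and should be adapted to your own schema.

```python
from __future__ import annotations

from collections import Counter

REQUIRED_FIELDS = ("id", "title", "text")  # hypothetical schema for this sketch


def quality_report(records: list[dict], min_chars: int = 20) -> dict[str, int]:
    """Count records with missing fields, shared IDs, or very short text."""
    id_counts = Counter(r.get("id") for r in records if r.get("id") is not None)
    report = {"total": len(records), "missing_fields": 0, "duplicate_ids": 0, "too_short": 0}
    for record in records:
        if any(record.get(field) in (None, "") for field in REQUIRED_FIELDS):
            report["missing_fields"] += 1
        if id_counts.get(record.get("id"), 0) > 1:
            report["duplicate_ids"] += 1  # counts every record that shares its ID
        if len(str(record.get("text") or "")) < min_chars:
            report["too_short"] += 1
    return report


records = [
    {"id": 1, "title": "Q1 report", "text": "Revenue grew in all regions this quarter."},
    {"id": 1, "title": "Q1 report copy", "text": "Revenue grew in all regions this quarter."},
    {"id": 2, "title": "", "text": "short"},
]
print(quality_report(records))
```

Running a report like this before each import makes data problems visible early, rather than surfacing later as unexplained gaps in search results.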

Another consideration is the initial complexity associated with setting up DeepSeek-V3. Implementing such a sophisticated tool often necessitates extensive technical expertise and resources. Organizations may face challenges regarding integration with existing systems, data preparation, and configuration. These aspects can be daunting, especially for smaller firms lacking specialized IT support. Therefore, adequate planning and training are paramount to ensure successful deployment and operation of the platform.

Furthermore, user resistance can potentially hinder the adoption of DeepSeek-V3. As with any new technology, individuals may be skeptical or hesitant to rely on AI for data-searching tasks, especially if they are accustomed to traditional methods. Overcoming this reluctance requires a concerted effort in terms of education and demonstrating the tangible benefits of DeepSeek-V3. Resistance can stem from fears of job displacement or concerns about AI decision-making processes, which must be addressed through transparent communication and effective change management strategies.

In conclusion, while DeepSeek-V3 presents promising advantages, it is crucial for organizations to be cognizant of these challenges. Addressing data quality, setup complexities, and user acceptance can facilitate a smoother transition to utilizing this innovative search technology, ultimately maximizing its potential benefits.

Future Developments and Enhancements

As technology continues to advance at a breakneck pace, the future of DeepSeek-V3 appears promising, especially in the realm of artificial intelligence and data search capabilities. Several potential enhancements could significantly improve user experience and efficiency in data retrieval. One such enhancement is the integration of more advanced natural language processing (NLP) techniques. Enhancing DeepSeek-V3’s ability to understand and interpret user queries in a conversational manner could facilitate a more intuitive search experience. By employing state-of-the-art NLP frameworks, the system could contextualize queries, yielding more relevant and precise results.
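Part of this can already be approximated by pairing a conventional index with a chat model that rewrites a conversational question into search keywords. The sketch assumes DeepSeek-V3 is reached through DeepSeek's OpenAI-compatible chat completions API, with the model ID `deepseek-chat` and an API key in an environment variable named `DEEPSEEK_API_KEY`; check the current DeepSeek API documentation before relying on these values. The prompt and the one-keyword-per-line convention are further assumptions, and the model may not always follow them.

```python
from __future__ import annotations

import json
import os
import urllib.request

API_URL = "https://api.deepseek.com/chat/completions"  # OpenAI-compatible endpoint (verify in docs)
MODEL = "deepseek-chat"  # model ID assumed to serve DeepSeek-V3
SYSTEM_PROMPT = (
    "Rewrite the user's question as a short list of search keywords, "
    "one per line, with no other text."
)


def build_rewrite_request(question: str) -> dict:
    """Chat-completions payload asking the model to turn a conversational query into keywords."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
        ],
        "temperature": 0.0,  # favor consistent rewrites over creative ones
    }


def parse_keywords(response: dict) -> list[str]:
    """Extract one keyword per non-empty line from the first choice."""
    content = response["choices"][0]["message"]["content"]
    return [line.strip() for line in content.splitlines() if line.strip()]


def rewrite_query(question: str) -> list[str]:
    """Send the request; needs network access and DEEPSEEK_API_KEY (assumed variable name)."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(build_rewrite_request(question)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['DEEPSEEK_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return parse_keywords(json.load(response))
```

The keywords returned by `rewrite_query` can then be passed to any keyword or hybrid index. Keeping payload construction and parsing in separate functions makes both testable without network access.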

AI-driven predictive analytics is another area ripe for development. With the right algorithms, DeepSeek-V3 could anticipate user queries based on historical data and usage patterns, streamlining the search process further. This could lead to proactive suggestion systems that save users time otherwise spent searching. Deeper use of machine learning would also extend the feedback-driven refinement DeepSeek-V3 already performs, producing smarter and more adaptive search.
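Query anticipation can begin with something as simple as prefix-based autocompletion from past searches, ranked by frequency. The class below is a hypothetical sketch of that idea; a linear scan is fine for small histories, while larger ones would typically use a trie or a sorted index.

```python
from __future__ import annotations

from collections import Counter


class QuerySuggester:
    """Suggest past queries that start with what the user has typed so far."""

    def __init__(self) -> None:
        self.history: Counter = Counter()

    def record(self, query: str) -> None:
        normalized = query.strip().lower()
        if normalized:  # ignore empty searches
            self.history[normalized] += 1

    def suggest(self, prefix: str, limit: int = 3) -> list[str]:
        prefix = prefix.strip().lower()
        matches = [(q, n) for q, n in self.history.items() if q.startswith(prefix)]
        # most frequent first; alphabetical order breaks ties deterministically
        matches.sort(key=lambda item: (-item[1], item[0]))
        return [q for q, _ in matches[:limit]]


suggester = QuerySuggester()
for q in ["sales report 2024", "sales report 2024", "sales forecast", "salary bands"]:
    suggester.record(q)
print(suggester.suggest("sal"))
```

Ranking by frequency alone favors old habits; weighting recent queries more heavily is a common refinement.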

Additionally, as data privacy and security become increasingly paramount, future developments could focus on creating more robust security protocols within DeepSeek-V3. Implementing advanced encryption techniques and user authentication systems may ensure that sensitive information remains protected during data searches, fostering user trust in the technology.
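Alongside encryption, a common safeguard in search systems is filtering results by the searching user's permissions, so that restricted documents never appear in results. The sketch below assumes each document carries a set of allowed groups; `Document` and `authorized_results` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset[str] = field(default_factory=frozenset)


def authorized_results(results: list[Document], user_groups: set[str]) -> list[Document]:
    """Keep only hits that share at least one group with the user, preserving rank order."""
    return [doc for doc in results if doc.allowed_groups & user_groups]


hits = [
    Document("d1", "payroll summary", frozenset({"hr"})),
    Document("d2", "employee handbook", frozenset({"hr", "staff"})),
    Document("d3", "unreviewed draft"),  # no groups: hidden from everyone
]
print([d.doc_id for d in authorized_results(hits, {"staff"})])
```

Documents with no allowed groups are hidden from everyone, a deny-by-default choice that fails safe when permission data is missing. Applying the filter on the server, before results are returned, keeps restricted titles and snippets from leaking to the client.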

Finally, as AI and data search technologies converge, DeepSeek-V3 could expand to multi-modal search, combining text, audio, and visual data in a single search experience. Such capabilities are likely to matter more as users demand more versatile and comprehensive data management solutions, and they point to further advances for DeepSeek-V3 in the near future.