How to Leverage AI for Better User Interactions

- Introduction to Search Behavior

- The Role of AI in Modern Search Engines

- High-Level Overview: What Users Seek

- Reasons for User Drop-Off at AI Overviews

- Statistics on Search Interactions and Drop-Off Rates

- Impact of Content Quality on Search Engagement

- Improving User Journey Beyond AI Overviews

- Case Studies of Successful Engagement Strategies

- Conclusion and Future Trends in Search Behavior

Introduction to Search Behavior

Search behavior refers to how individuals use search engines to find information, products, or services online. Understanding this behavior is essential for digital marketers, website developers, and content creators alike, as it shows how users engage with search tools to fulfill their needs. As users interact with search engines, they move through a series of stages: formulating queries, reviewing AI-generated summaries or overviews, and ultimately making decisions based on the information retrieved.

One of the key factors influencing search behavior is search intent, which can be categorized into various types including informational, navigational, and transactional. Users may enter a query seeking straightforward answers, specific website navigation, or detailed insights into purchasing decisions. Recognizing these intent types is crucial as it enables content creators to tailor their offerings to meet user expectations more effectively. Moreover, search intent plays a significant role in how users interact with AI overviews, which can sometimes provide sufficient information to satisfy their queries without delving deeper into the search results.
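The three intent types can be illustrated with a small rule-based classifier. This is only a sketch: the cue-word lists and the function name `classify_intent` are assumptions made for illustration, and a real system would more likely learn such signals from labeled queries than hard-code them.

```python
import re

# Hypothetical cue words, chosen for illustration only.
TRANSACTIONAL_CUES = {"buy", "price", "cheap", "discount", "order", "coupon"}
NAVIGATIONAL_CUES = {"login", "homepage", "website", "official", "signin"}


def classify_intent(query: str) -> str:
    """Return 'transactional', 'navigational', or 'informational' for a query."""
    tokens = set(re.findall(r"[a-z0-9]+", query.lower()))
    if tokens & TRANSACTIONAL_CUES:
        return "transactional"
    if tokens & NAVIGATIONAL_CUES:
        return "navigational"
    # Default: treat everything else as a request for information.
    return "informational"


print(classify_intent("buy running shoes"))             # transactional
print(classify_intent("Coursera login"))                # navigational
print(classify_intent("how does photosynthesis work"))  # informational
```

Checking transactional cues first is a design choice: a query such as "buy from official website" carries both signals, and this sketch assumes the purchase signal matters more to a content team.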

Furthermore, the user experience during the search process is influenced by numerous factors, including the effectiveness of search algorithms, the design of the search results page, and the relevance of displayed content. A well-structured search experience can facilitate user engagement, while a poorly constructed one may lead to frustration and abandonment of the query. Understanding where users tend to drop off in their search journey can provide valuable insights. By analyzing these drop-off points, stakeholders can identify areas for improvement, enhancing the overall efficacy of search engine interactions.
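One way to locate drop-off points is to count how many users reach each stage of the search journey and compute the share lost between consecutive stages. The sketch below assumes hypothetical stage names and counts; neither comes from real data.

```python
from typing import Dict, List, Tuple


def drop_off_rates(funnel: List[Tuple[str, int]]) -> Dict[str, float]:
    """Fraction of users lost between each stage and the one that follows it."""
    rates = {}
    for (stage, count), (_next_stage, next_count) in zip(funnel, funnel[1:]):
        # Guard against empty stages to avoid dividing by zero.
        rates[stage] = 0.0 if count == 0 else (count - next_count) / count
    return rates


# Hypothetical stage counts, for illustration only.
funnel = [
    ("query_submitted", 1000),
    ("overview_viewed", 800),
    ("result_clicked", 200),
]

rates = drop_off_rates(funnel)
worst_stage = max(rates, key=rates.get)
print(rates)        # {'query_submitted': 0.2, 'overview_viewed': 0.75}
print(worst_stage)  # overview_viewed
```

In this made-up funnel, the largest loss occurs after the overview is viewed, which is the kind of signal that would prompt a closer look at that stage.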

In summary, appreciating the dynamics of search behavior is integral for optimizing content and user experience on digital platforms. By examining user intent and interactions with AI-generated overviews, one can better understand the patterns and expectations that underpin online search activities.

The Role of AI in Modern Search Engines

In recent years, the integration of artificial intelligence (AI) in search engines has fundamentally transformed the way users access and interact with information online. AI algorithms play a pivotal role in enhancing search results by utilizing advanced machine learning and natural language processing techniques. This innovation allows search engines to understand queries in a more nuanced manner, thereby delivering more relevant content to users.

Machine learning models are adept at recognizing patterns within vast amounts of data. These models analyze user behavior, including click-through rates, time spent on pages, and past search histories, to adapt and refine search results. As users continually interact with search engines, machine learning enables these platforms to evolve, tailoring responses to individual preferences while maintaining a seamless experience. This adaptability is vital, as it ensures that users are presented with the most pertinent information without having to navigate through unrelated or less useful results.

Natural language processing (NLP) further elevates the effectiveness of AI in search engines. By understanding the context and semantics behind user queries, NLP allows search engines to interpret language subtleties, including idioms, synonyms, and variations in phrasing. This capability is especially important in accommodating the diverse linguistic styles of users, as it ensures that everyone can find the information they seek regardless of how they phrase their search query. As a result, the quality of search results improves significantly.

Additionally, personalized content delivery has become a hallmark of AI-driven search engines. By leveraging user data, AI can curate tailored search results that reflect individual interests, enhancing user satisfaction. This personalization not only increases engagement but also fosters trust in search engine platforms as they consistently provide relevant and valuable information. The continuous advancement of AI in search technologies sets the stage for an enriched user experience, transforming how individuals navigate the digital landscape.

High-Level Overview: What Users Seek

When it comes to online searches, users frequently encounter AI-generated overviews that provide a high-level summary of information. These overviews are designed to give immediate responses, encapsulating essential details in a user-friendly format. Common features of AI overviews include succinct bullet points, concise summaries, and direct answers to specific queries. This presentation style is particularly effective in catering to users with time constraints or those seeking quick insights into a topic.

The format of these overviews can significantly influence user engagement. For instance, bullet points allow for rapid scanning, making it easier for users to absorb key information without reading a longer text. Similarly, succinct summaries condense broader topics, guiding users who may not have the expertise to interpret complex data. Direct answers, often highlighted at the top of search results, draw attention and satisfy users' informational needs immediately.

However, while these features are appealing, they may not entirely fulfill users’ deeper search intentions. Many individuals enter queries with specific information needs, looking for detailed analysis, expert opinions, or comprehensive data that a brief overview simply cannot provide. Consequently, reliance on AI overviews can lead to high drop-off rates, as users may feel that their inquiry has not been adequately addressed. This tension between the convenience of instant answers and the desire for more in-depth content is a critical aspect of search behavior: user satisfaction depends on how well the depth of an answer matches the complexity of the query.

Understanding what users genuinely seek in their searches is crucial for content creators and marketers aiming to bridge the gap between high-level overviews and the depth of information that users often desire. By recognizing this phenomenon, strategies can be developed that cater to diverse user needs, enhancing engagement and retention.

Reasons for User Drop-Off at AI Overviews

As digital users sift through vast amounts of content, there are several reasons why many may choose to cease their search upon encountering an AI-generated overview. One significant factor is the perceived insufficiency of information provided in such overviews. While AI summaries aim to encapsulate complex topics succinctly, they often lack the nuanced detail that serious seekers of knowledge typically desire. Consequently, users may find themselves feeling dissatisfied and opt to look elsewhere for more comprehensive resources.

Another contributing aspect to user drop-off is the inherent preference for detailed content among many individuals. For users engaged in thorough research or looking for in-depth understanding, brief overviews can feel inadequate, prompting them to abandon what they perceive as a superficial exploration of the topic. This phenomenon illustrates a gap between the intention behind AI-generated summaries, which is to streamline information consumption, and the actual needs of users who often favor extensive data that can shed light on complex subjects.

Additionally, user browsing tendencies can play a role in this behavior. Many individuals approach online queries with the intention of gathering information through exploration rather than passive reading. When faced with a succinct AI overview, they may feel compelled to look for alternatives that offer richer content. This inclination toward exploring various resources rather than digesting short summaries can lead to quicker disengagement from the AI overview, as users seek more engaging and informative experiences. In summary, the reasons for user drop-off at AI overviews are multifaceted, indicating a demand for greater depth and interactivity in content delivery.

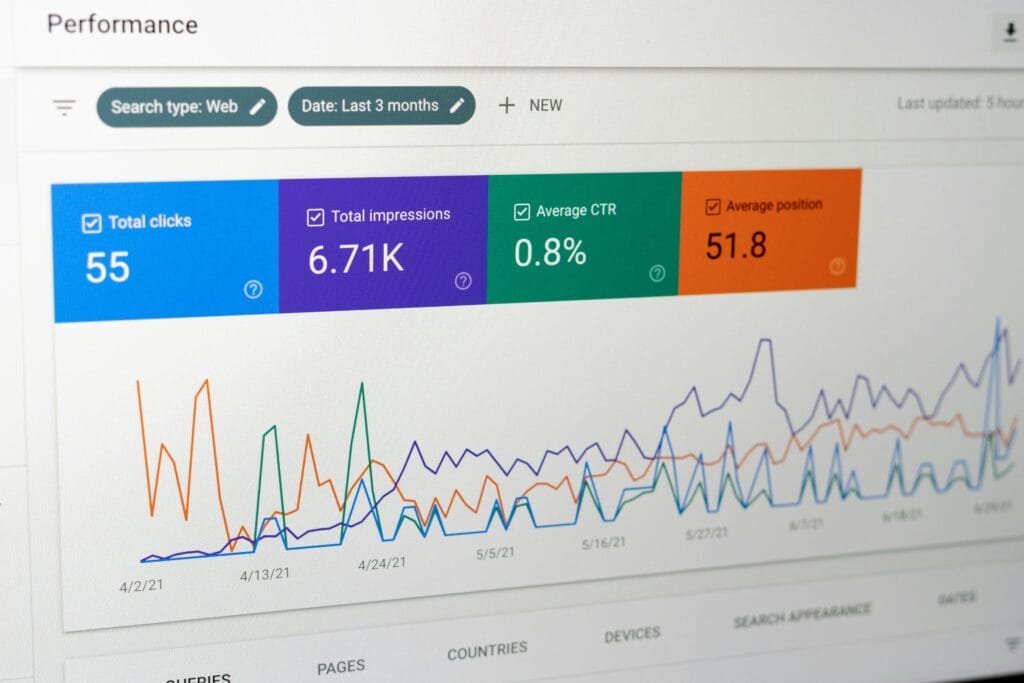

Statistics on Search Interactions and Drop-Off Rates

Understanding how users interact with search engines is essential for improving the search experience and delivering relevant content. Recent studies suggest that a significant percentage of queries terminate at the AI overview stage. Approximately 30% of users report that their searches conclude after reviewing the AI-generated overview, suggesting that this initial information often satisfies their immediate needs.

Moreover, engagement data indicate that fewer than 20% of users click on links beyond the first page of results, with many opting to review the AI overview instead. This tendency contributes to notable bounce rates: around 50% of users leave a search results page without interacting further if they perceive that the available AI summaries have sufficiently addressed their query. These users have little motivation to explore additional resources or links.

Furthermore, users frequently refine their searches. Around 40% of individuals who return to the search results rephrase or adjust their queries after initially relying on the AI overview. This pattern shows a duality in search behavior: an initial dependence on AI summaries, followed by an attempt to find more targeted information when those summaries do not entirely fulfill the inquiry.

In summary, current statistics reinforce the view that a notable portion of user interactions with search engines concludes at the AI overview stage. The combination of high bounce rates and frequent query refinement illustrates the complexity of user search behavior and the ongoing need for search engines to evolve and meet user demands effectively.
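Rates like the ones discussed in this section can be derived from session logs. The following sketch assumes a hypothetical `Session` record with three boolean fields and uses all sessions as the denominator for every rate. Both are modeling choices made for illustration, and the sample sessions are invented rather than drawn from any study.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Session:
    saw_overview: bool    # an AI overview was shown
    clicked_result: bool  # the user clicked at least one result link
    refined_query: bool   # the user rephrased or adjusted the query


def search_metrics(sessions: List[Session]) -> Dict[str, float]:
    """Share of sessions that ended at the overview, had no click, or were refined."""
    total = len(sessions)
    if total == 0:
        return {"ended_at_overview": 0.0, "no_interaction": 0.0, "refined": 0.0}
    ended = sum(
        s.saw_overview and not s.clicked_result and not s.refined_query
        for s in sessions
    )
    no_click = sum(not s.clicked_result for s in sessions)
    refined = sum(s.refined_query for s in sessions)
    return {
        "ended_at_overview": ended / total,
        "no_interaction": no_click / total,
        "refined": refined / total,
    }


# Invented sample sessions, for illustration only.
sessions = [
    Session(saw_overview=True, clicked_result=False, refined_query=False),
    Session(saw_overview=True, clicked_result=False, refined_query=True),
    Session(saw_overview=True, clicked_result=True, refined_query=False),
    Session(saw_overview=False, clicked_result=True, refined_query=False),
]
metrics = search_metrics(sessions)
print(metrics)
```

Because published figures often use different denominators (all users, returning users, sessions with an overview), it helps to state the denominator explicitly before comparing numbers.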

Impact of Content Quality on Search Engagement

Content quality plays a pivotal role in shaping user engagement and retention in the digital landscape. As users navigate through search engine results, they are often met with a myriad of options, some compelling and others lacking substance. High-quality content that is well-structured, relevant, and informative captures users’ attention, encouraging them to explore further rather than abandon the search results. When users encounter content that meets their needs, they are more likely to stay engaged, spending additional time on the site and increasing the likelihood of conversion.

Conversely, when content fails to meet the expectations of users, whether due to a lack of depth, poor organization, or irrelevant information, it can lead to frustration and increased bounce rates. Users are likely to disregard content that is not directly applicable to their queries or that presents information in a convoluted manner. To maintain user interest, content should not only be accurate but also presented in a clear, logical format that enhances readability. This includes utilizing headings, bullet points, and concise paragraphs to facilitate easy scanning of the material.

Moreover, the use of tailored, authoritative content can significantly enhance engagement. When content addresses specific queries and demonstrates a deep understanding of the subject matter, it fosters trust and keeps users coming back for more. Engaging content, which may include a mix of text, visuals, and interactive elements, encourages deeper exploration and generates a community of return visitors. In an era where information is abundant, the quality of content stands out as a critical factor determining user engagement and retention in search behavior.

Improving User Journey Beyond AI Overviews

As users increasingly rely on AI overviews for quick information, it becomes imperative for content creators and website owners to enhance the user journey beyond these initial interactions. One effective strategy is to optimize content for deeper engagement, guiding users towards more comprehensive insights that go beyond surface-level responses. This entails utilizing engaging formats such as infographics, videos, and interactive tools which invite users to delve deeper into the subject matter.

An essential aspect of enhancing user engagement is the strategic placement of related resources. After a user has completed an initial search and reviewed an AI-generated overview, they should be directed to supplementary content that aligns with their interests. This could include articles, case studies, or tutorials that provide additional context and insight, enabling users to explore further without feeling lost or overwhelmed. Implementing user-friendly navigation elements, such as “related articles” or “you might also like” sections, can effectively guide users to relevant information that enriches their understanding.
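A "related articles" section can be driven by something as simple as tag overlap. The sketch below ranks articles by Jaccard similarity of their tag sets. The catalog, titles, and tags are hypothetical, and this is one possible approach among many rather than a recommended production design.

```python
from typing import Dict, List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection divided by size of the union (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def related_articles(current: str, catalog: Dict[str, Set[str]], limit: int = 3) -> List[str]:
    """Titles most similar to `current` by tag overlap, best match first."""
    current_tags = catalog[current]
    scored = [
        (jaccard(current_tags, tags), title)
        for title, tags in catalog.items()
        if title != current
    ]
    # Highest similarity first; ties are broken alphabetically by title.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    # Drop articles that share no tags at all.
    return [title for score, title in scored if score > 0][:limit]


# Hypothetical catalog, for illustration only.
catalog = {
    "What Is Search Intent?": {"search", "intent", "seo"},
    "Writing for AI Overviews": {"search", "ai", "seo"},
    "Intent-Driven Keyword Research": {"intent", "seo", "keywords"},
    "Office Coffee Guide": {"coffee"},
}
suggestions = related_articles("What Is Search Intent?", catalog)
print(suggestions)
# ['Intent-Driven Keyword Research', 'Writing for AI Overviews']
```

Filtering out zero-overlap articles keeps unrelated pages from filling the section when the catalog is small.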

Moreover, encouraging follow-up queries helps sustain the user journey. Users should be prompted to ask more specific questions that lead to more tailored information. Adding prompts or contextual suggestions for follow-up queries not only enhances the user experience but also promotes curiosity and exploration. For example, an AI overview might include questions such as “What are the next steps?” or “How does this apply to my situation?” to prompt further inquiry.

By implementing these strategies, content creators can significantly improve the user journey beyond AI overviews, enabling users to make informed decisions and engage more deeply with the subject at hand. This holistic approach helps users transition from initial curiosity to comprehensive understanding, ultimately enhancing their overall experience with the content.

Case Studies of Successful Engagement Strategies

In the ever-evolving digital landscape, many organizations have recognized the importance of sustaining user engagement after providing AI overviews. One notable case is the e-learning platform Coursera, which implemented a strategy to enhance course recommendations based on users’ previous interactions and AI-generated overviews. By utilizing machine learning algorithms, Coursera not only tailored the content shown to users but also offered additional resources, such as community forums and study groups. As a result, the platform saw a 25% increase in user retention within six months of this initiative.

Another example can be found in the online travel sector with the platform Airbnb. After realizing that many visitors were simply browsing without engaging further, Airbnb introduced personalized AI overviews highlighting popular local attractions and experiences based on user preferences. This integration led to a significant uptick in booking conversions, with a 15% increase within the first quarter after implementation. Engaging users in a more meaningful way prior to the booking phase was essential, and the enhanced AI tools played a pivotal role in achieving this goal.

Furthermore, health and fitness applications like MyFitnessPal have utilized similar strategies by combining AI-generated overviews with personalized content. They began offering users a tailored fitness journey based on their previous logs and goals. This approach not only enhanced user experience but also drove a notable increase in app engagement rates, resulting in a 30% rise in daily active users. These examples demonstrate that well-crafted engagement strategies, centered on AI overviews, can significantly impact user retention and overall business outcomes.

Conclusion and Future Trends in Search Behavior

In analyzing search behavior within the context of AI overviews, it is evident that a significant number of queries are being satisfied by summarized information generated by artificial intelligence. Users are increasingly relying on concise, AI-generated responses for quick insights, thus shifting the dynamics of traditional search patterns. This behavior marks a substantial transition, emphasizing the need for both users and content creators to adapt to these changes in information retrieval.

Understanding the factors driving this trend is essential. User expectations have evolved towards immediacy and efficiency, with searches often prioritizing rapid answers over exhaustive exploration. In this landscape, the role of search engines is also transforming. Search algorithms are progressively optimizing for AI-generated content that can effectively engage and address user queries, indicating a potential shift in how search relevance is defined.

Looking towards the future, it is anticipated that advancements in AI technology will further refine user interaction with search engines. As AI continues to develop, the likelihood of personalized search results that adapt to individual queries based on historical behavior increases. This suggests a future where queries may predominantly yield customized AI overviews tailored to user preferences and contexts, potentially diminishing the reliance on traditional links and listings.

Moreover, the integration of conversational AI may further augment the search experience, allowing users to engage in dialogue with search engines. This trend could lead to more nuanced understanding and fulfillment of user intent, enriching the overall effectiveness of information retrieval. As such, stakeholders in the digital communication space must remain vigilant and proactive in recognizing these evolving user behaviors and technological capabilities, paving the way for innovative strategies that align with the anticipated shifts in search behavior.