How to Start a Career in AI for Healthcare

- Introduction to Artificial Intelligence and Healthcare

- Foundational Knowledge in Computer Science

- Statistics and Data Analysis Courses

- Machine Learning and Deep Learning

- Health Informatics and Data Management

- Ethics and Regulations in Healthcare AI

- Interdisciplinary Courses: Combining Healthcare and Technology

- Practical Experience and Industry Exposure

- Conclusion: Charting Your Path in AI for Healthcare

Introduction to Artificial Intelligence and Healthcare

Artificial Intelligence (AI) is a transformative force in the healthcare sector, offering solutions that can enhance patient care, streamline hospital operations, and accelerate medical research. By applying algorithms and machine learning, AI systems can analyze large datasets quickly and, when properly validated, accurately, supporting informed decision-making and better health outcomes. Integrating AI into healthcare not only supports clinicians in diagnosis but also enables personalized treatment plans tailored to each patient’s needs.

One significant application of AI in healthcare involves predictive analytics, which enables healthcare providers to anticipate patient needs and allocate resources more efficiently. These advancements can lead to reduced wait times and improved patient satisfaction, ultimately fostering a more responsive medical environment. Furthermore, AI plays a crucial role in clinical trials and medical research, enabling researchers to identify patterns and correlations that were previously overlooked, thus accelerating the development of new treatments and therapies.

The rise of AI in healthcare has created a range of job opportunities for professionals interested in this dynamic field. Roles such as AI researcher, data scientist, and machine learning engineer are increasingly in demand as healthcare organizations seek people who can apply technology to clinical and operational problems. Cross-disciplinary positions, such as clinical informaticist and biomedical engineer, reflect the growing convergence of healthcare and technology and highlight the diverse skill sets this evolving landscape requires.

Overall, the intersection of AI and healthcare is reshaping how medical professionals deliver care and how patients manage their health. As the field expands, demand for knowledgeable individuals with the right skills is likely to grow, making this a promising career path for those entering the domain.

Foundational Knowledge in Computer Science

To effectively enter the field of Artificial Intelligence (AI) in healthcare, a solid grounding in computer science is essential. Students should prioritize foundational courses that will equip them with the necessary skills to understand and develop AI systems. Key areas of focus include programming languages, data structures, algorithms, and software development practices.

Programming languages are the building blocks for creating any AI application. Python stands out as a preferred language because of its readable syntax and rich ecosystem of libraries, such as pandas and NumPy for data analysis and TensorFlow and Keras for machine learning. Java is also relevant, especially in large-scale systems where performance and scalability are crucial. Mastering these languages allows students to write efficient code and explore AI concepts effectively.

Understanding data structures is another critical aspect of computer science that aids in manipulating and organizing data efficiently. Knowledge of arrays, linked lists, trees, and graphs is vital as these structures can significantly affect the performance of algorithms used in AI. Furthermore, algorithms form the heart of AI systems; thus, students should delve into both classical algorithms and those specific to machine learning and data processing.
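To make the point about data structures concrete, here is a minimal sketch of an emergency-department triage queue built on a binary heap with Python's standard `heapq` module. The `TriageQueue` class, the patient IDs, and the convention that a lower acuity number means higher urgency are all hypothetical, chosen only for illustration. A heap returns the most urgent patient in O(log n) time per operation, whereas repeatedly scanning an unsorted list would cost O(n) per lookup.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class TriageEntry:
    # Hypothetical convention: lower acuity number = more urgent.
    acuity: int
    arrival: int  # tie-breaker so equal-acuity patients are seen in arrival order
    patient_id: str = field(compare=False)  # excluded from ordering


class TriageQueue:
    """Hypothetical triage queue backed by a binary min-heap."""

    def __init__(self) -> None:
        self._heap: list[TriageEntry] = []
        self._arrivals = 0

    def add(self, patient_id: str, acuity: int) -> None:
        # O(log n) insertion that preserves the heap invariant.
        heapq.heappush(self._heap, TriageEntry(acuity, self._arrivals, patient_id))
        self._arrivals += 1

    def next_patient(self) -> str:
        # O(log n) removal of the most urgent (smallest) entry.
        return heapq.heappop(self._heap).patient_id

    def __len__(self) -> int:
        return len(self._heap)


queue = TriageQueue()
queue.add("P-001", acuity=3)
queue.add("P-002", acuity=1)
queue.add("P-003", acuity=3)
queue.add("P-004", acuity=2)
print([queue.next_patient() for _ in range(len(queue))])
# → ['P-002', 'P-004', 'P-001', 'P-003']
```

The arrival counter matters: without it, two patients with the same acuity would be ordered arbitrarily rather than first come, first served.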

Proficiency in software development methodologies also prepares students to take part in collaborative projects, follow coding standards, and understand the software life cycle. This knowledge is essential in healthcare AI environments, where systems must be reliable and integrate seamlessly with existing clinical infrastructure.

In summary, a foundation in computer science is indispensable for anyone aspiring to work in AI within the healthcare sector. These essential courses not only prepare students to grasp complex AI concepts but also enable them to contribute meaningfully to advancements in healthcare technology.

Statistics and Data Analysis Courses

In the rapidly evolving field of artificial intelligence (AI), particularly within healthcare, a strong foundation in statistics and data analysis is essential. Professionals aspiring to work with AI applications must acquire knowledge in both descriptive and inferential statistics, which form the bedrock for making informed decisions based on healthcare data. Descriptive statistics allow practitioners to summarize and visualize data trends, which is invaluable for understanding patient demographics, treatment outcomes, and other key metrics.
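As a small illustration of descriptive statistics, the sketch below summarizes a single variable using only Python's standard `statistics` module. The `describe` helper is hypothetical and the patient ages are invented, not drawn from any real cohort.

```python
import statistics


def describe(values: list[float]) -> dict[str, float]:
    """Hypothetical helper: common descriptive statistics for one variable."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "sd": statistics.stdev(values),  # sample standard deviation (divides by n - 1)
        "iqr": q3 - q1,                  # interquartile range
        "min": min(values),
        "max": max(values),
    }


# Invented patient ages (years), for illustration only.
ages = [34, 45, 29, 61, 52, 47, 38, 70, 55, 41]
for name, value in describe(ages).items():
    print(f"{name:>6}: {value:.1f}")
```

Reporting the median and interquartile range alongside the mean and standard deviation is a common choice for clinical variables, which are often skewed.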

Moreover, inferential statistics are crucial for making predictions and drawing conclusions about larger populations based on sample data. This element becomes increasingly vital as healthcare organizations utilize AI models to improve patient care and operational efficiency. For example, understanding confidence intervals and hypothesis testing helps in assessing the effectiveness of AI algorithms used for predictive analytics in patient management.
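One way to put confidence intervals to work is to report the uncertainty around a model's measured accuracy. The sketch below computes a Wilson score interval for a proportion; it is chosen here over the simpler normal-approximation (Wald) interval because it behaves better with small samples or accuracies near 0 or 1. The `wilson_interval` helper and the 870-of-1,000 result are hypothetical.

```python
import math
from statistics import NormalDist


def wilson_interval(successes: int, n: int, confidence: float = 0.95) -> tuple[float, float]:
    """Hypothetical helper: Wilson score confidence interval for a binomial proportion."""
    if n <= 0:
        raise ValueError("n must be positive")
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return center - half_width, center + half_width


# Hypothetical result: a model classified 870 of 1,000 held-out cases correctly.
low, high = wilson_interval(870, 1000)
print(f"accuracy 0.870, 95% CI [{low:.3f}, {high:.3f}]")
```

A wide interval is a signal that the test set is too small to support strong claims about the model, whatever its point accuracy.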

Knowledge of probability theory also plays a significant role in managing uncertainty in healthcare data. Probability distributions, risk assessment, and event modeling are central concepts that AI professionals must master to evaluate the predictive capabilities of algorithms. A firm grasp of these principles helps practitioners interpret AI outputs correctly and avoid the misinterpretations that flawed data analysis can produce.
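A classic example of why probability matters is Bayes' theorem applied to a screening test or classifier. The positive predictive value (the chance that a flagged patient truly has the condition) depends heavily on prevalence. The sketch below uses hypothetical numbers: a model with 90% sensitivity and 95% specificity applied to a condition with 1% prevalence.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """P(condition | positive result) by Bayes' theorem:

    PPV = sens * prev / (sens * prev + (1 - spec) * (1 - prev))
    """
    true_pos = sensitivity * prevalence               # P(positive and condition)
    false_pos = (1 - specificity) * (1 - prevalence)  # P(positive and no condition)
    return true_pos / (true_pos + false_pos)


# Hypothetical performance figures, not taken from any real model.
ppv = positive_predictive_value(0.90, 0.95, 0.01)
print(f"PPV: {ppv:.1%}")  # → PPV: 15.4%
```

Even a seemingly strong model here would be wrong about most of the patients it flags, because false positives from the large healthy population outnumber the true positives.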

Additionally, learning various data analysis techniques, including regression analysis, data visualization, and machine learning methodologies, enhances the ability to derive insights from complex datasets. Familiarity with software tools and programming languages such as R and Python can significantly augment one’s skills in executing robust data analyses, ultimately facilitating the integration of AI solutions in healthcare scenarios.
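To show what regression analysis computes under the hood, here is a minimal ordinary least squares fit with one predictor, written in plain Python. The `fit_line` helper and the age and blood-pressure values are invented for illustration. In Python 3.10 and later, the standard library's `statistics.linear_regression` performs the same single-predictor fit.

```python
def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Hypothetical helper: ordinary least squares fit of y = intercept + slope * x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x has no variance; slope is undefined")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x  # the fitted line passes through the means
    return slope, intercept


# Invented data: patient age (years) vs. systolic blood pressure (mmHg).
age = [30, 40, 50, 60, 70]
sbp = [118, 124, 129, 137, 142]
slope, intercept = fit_line(age, sbp)
print(f"SBP = {intercept:.1f} + {slope:.2f} * age")
print(f"predicted SBP at age 55: {intercept + slope * 55:.1f}")
```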

In conclusion, courses in statistics and data analysis not only empower individuals to handle and interpret healthcare data effectively but are also pivotal in supporting the data-driven decision-making processes that drive successful AI implementations in the healthcare sector.

Machine Learning and Deep Learning

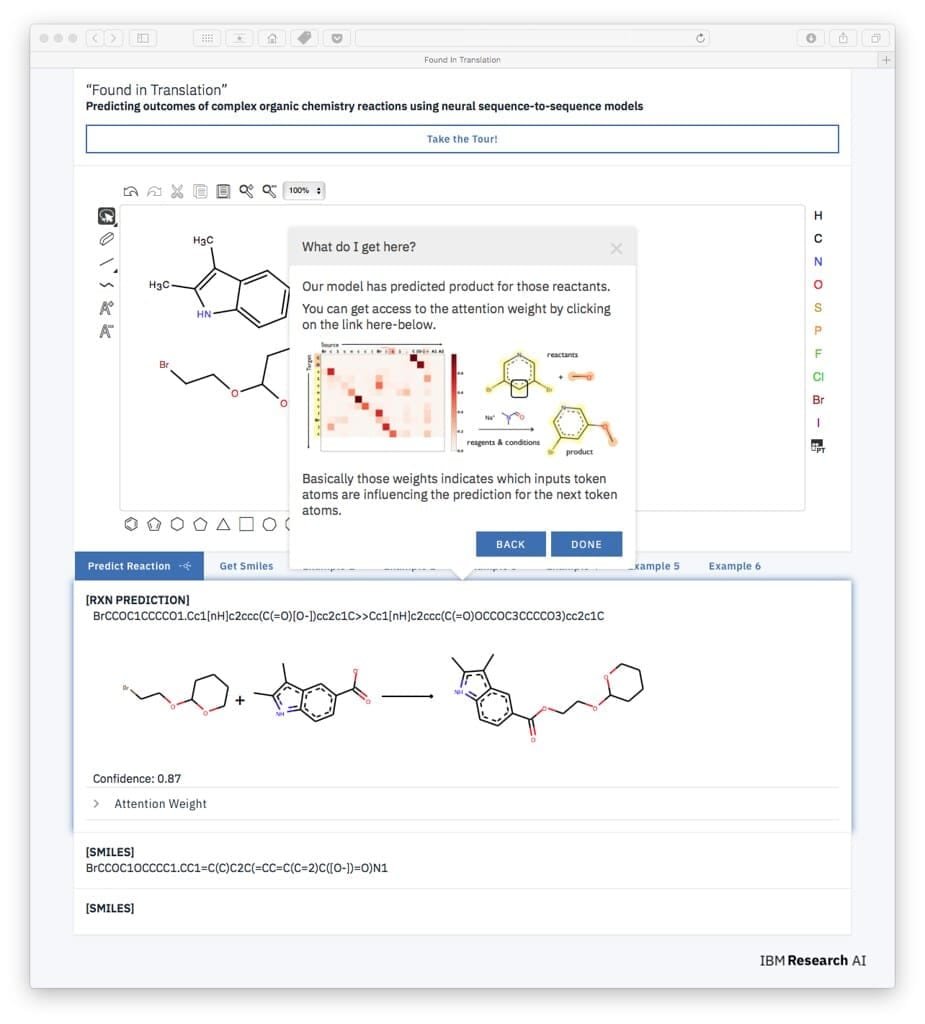

Machine learning (ML) and deep learning (DL) have become integral components of artificial intelligence in healthcare, driving numerous advancements and applications within this field. Aspiring professionals in AI within the healthcare sector should prioritize acquiring knowledge in these areas through specialized courses that cover essential concepts, algorithms, and technologies.

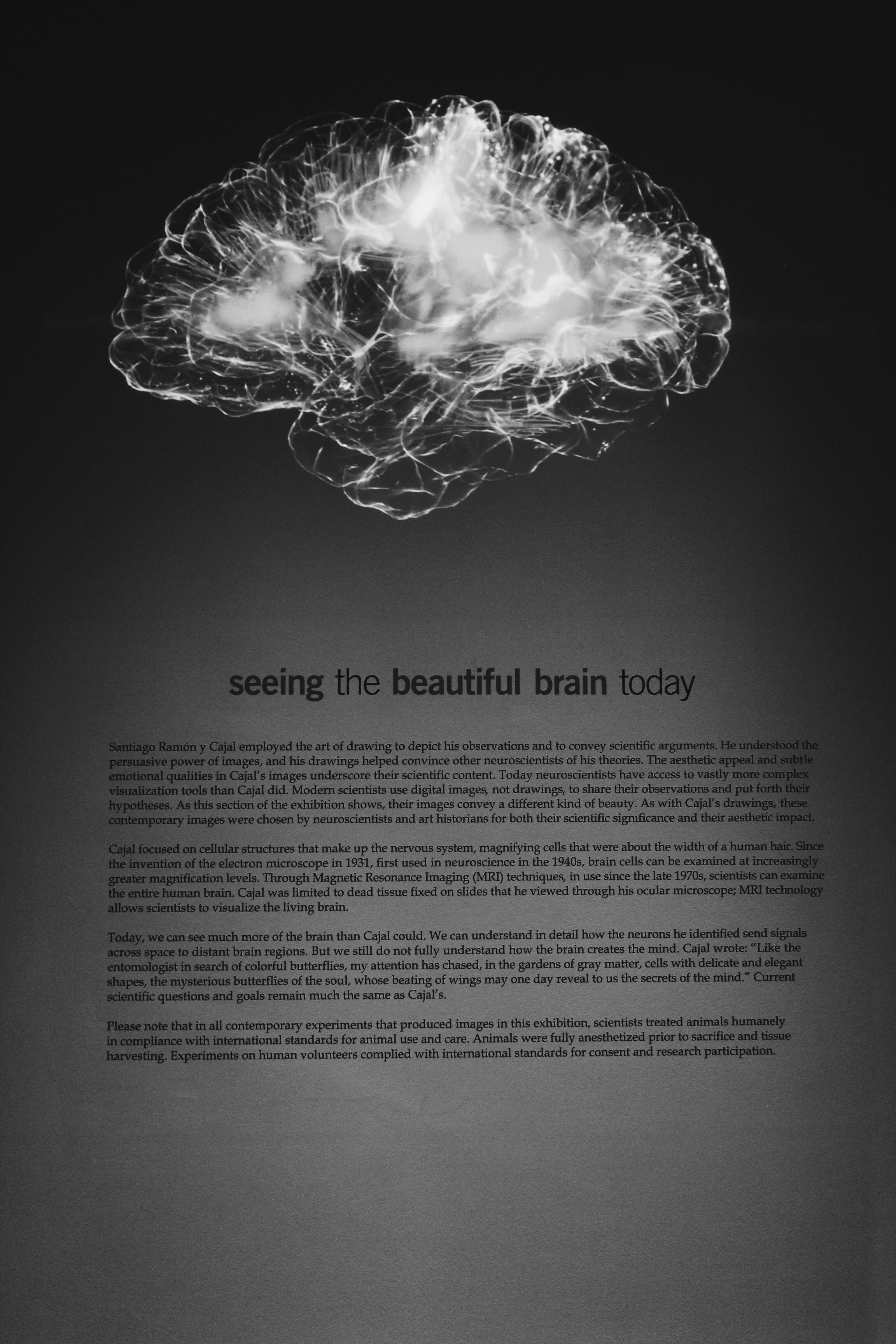

Fundamentally, machine learning involves training algorithms on datasets so that they can make decisions or predictions based on new data. Key concepts in ML encompass supervised learning, unsupervised learning, and reinforcement learning. Courses focusing on these areas will provide a robust understanding of algorithms such as linear regression, decision trees, and support vector machines. The utilization of ML in healthcare is particularly prominent in predictive analytics, where algorithms analyze patient data to predict outcomes such as disease progression and treatment effectiveness.
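To illustrate supervised learning with one of the algorithms named above, the sketch below fits a decision stump: a decision tree with a single split on one feature, learned from labeled examples. This is a simplification. Libraries such as scikit-learn grow deeper trees and by default choose splits using Gini impurity, while this stump simply minimizes misclassifications. The `Stump` and `fit_stump` names are hypothetical, and the glucose values and labels are invented; the learned threshold is not clinical guidance.

```python
from dataclasses import dataclass


@dataclass
class Stump:
    threshold: float
    label_above: int  # class (0 or 1) predicted when the feature exceeds the threshold

    def predict(self, value: float) -> int:
        return self.label_above if value > self.threshold else 1 - self.label_above


def fit_stump(values: list[float], labels: list[int]) -> Stump:
    """Hypothetical helper: depth-1 decision tree for binary (0/1) labels."""
    best, best_errors = None, len(labels) + 1
    # Try each observed value as a split point, with both label orientations.
    for threshold in sorted(set(values)):
        for label_above in (0, 1):
            candidate = Stump(threshold, label_above)
            errors = sum(candidate.predict(v) != y for v, y in zip(values, labels))
            if errors < best_errors:
                best, best_errors = candidate, errors
    return best


# Invented training data: fasting glucose (mg/dL) and a binary outcome label.
glucose = [85, 90, 95, 110, 130, 140, 150, 160]
outcome = [0, 0, 0, 0, 1, 1, 1, 1]
stump = fit_stump(glucose, outcome)
print(stump, stump.predict(125))
```

The "training" here is an exhaustive search over split points, which is exactly what a full decision tree repeats recursively on each resulting subset.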

Deep learning, a subset of machine learning, uses artificial neural networks with many layers of neurons to learn from large amounts of data. Because these networks learn useful features automatically rather than relying on hand-engineered ones, they have transformed fields such as image processing and natural language processing. In healthcare, DL is widely used for medical image analysis, where models are trained to detect abnormalities in X-rays, MRIs, and CT scans. Courses in deep learning should cover the essential neural network architectures, including convolutional neural networks (CNNs), which suit imaging data, and recurrent neural networks (RNNs), which suit sequential data such as vital-sign time series or clinical text.
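The core operation inside a CNN layer can be sketched in a few lines of plain Python: slide a small kernel over an image, take a weighted sum at each position, then apply a ReLU activation. Strictly speaking, CNN layers compute a cross-correlation (the kernel is not flipped), which is what this sketch does. In a real network the kernel weights are learned during training by a framework such as TensorFlow or Keras; here the `conv2d` and `relu` helpers are hypothetical and the vertical-edge kernel and tiny "image" are hand-picked for illustration.

```python
def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """Hypothetical helper: 'valid' (no padding), stride-1 2D cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            # Weighted sum of the kh x kw patch whose top-left corner is (i, j).
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map: list[list[float]]) -> list[list[float]]:
    """Zero out negative responses, as a ReLU activation does."""
    return [[max(0, v) for v in row] for row in feature_map]


# Tiny invented 4x5 "image" containing a bright vertical band.
image = [[0, 0, 1, 1, 0] for _ in range(4)]
# Hand-picked kernel that responds to dark-to-bright transitions left to right.
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

feature_map = conv2d(image, kernel)
print(feature_map)        # → [[3, 3, -3], [3, 3, -3]]
print(relu(feature_map))  # → [[3, 3, 0], [3, 3, 0]]
```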

By engaging in comprehensive training in machine learning and deep learning, learners will be well-equipped to contribute to innovative AI solutions in healthcare, ultimately enhancing patient care and operational efficiency. The integration of these technologies has the potential to transform diagnostics and treatment methodologies, making it imperative for future professionals to master these critical skills.

Health Informatics and Data Management

Health informatics and data management comprise a vital discipline that bridges healthcare with technology and data analytics. This field is increasingly important as healthcare systems evolve, necessitating professionals who can effectively manage and utilize healthcare data to enhance patient care and operational efficiency. Comprehensive coursework in health informatics typically covers essential topics such as healthcare data systems, electronic health records (EHR), and the interoperability of data across different platforms and systems.

One significant aspect of health informatics is the management of electronic health records. Familiarity with EHR systems is crucial, as these digital platforms store vast amounts of patient data that healthcare providers need to provide quality care. A solid understanding of EHR systems not only facilitates better patient management but also assists in data analysis, clinical research, and compliance with legal and regulatory standards.

Moreover, modules focusing on data interoperability are essential for anyone entering the field of artificial intelligence (AI) in healthcare. This interoperability allows different health information systems to communicate, ensuring that healthcare professionals have seamless access to patient data. The ability to integrate data from various sources supports the development of AI algorithms, which can ultimately lead to improved diagnostic tools and personalized treatment plans.
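As one concrete illustration of interoperability, many systems exchange data using the HL7 FHIR standard, which represents clinical data as structured resources, often serialized as JSON. The paragraph above does not name a specific standard, so treat FHIR here as an assumed example. The sketch below flattens a minimal, invented FHIR `Patient` resource into a row suitable for analysis. The `patient_to_row` helper is hypothetical; production pipelines would use a validated FHIR library and handle multiple names, extensions, and missing fields more carefully.

```python
import json

# Minimal, invented FHIR Patient resource (not real patient data).
raw = """
{
  "resourceType": "Patient",
  "id": "example-123",
  "name": [{"family": "Doe", "given": ["Jane", "Q"]}],
  "gender": "female",
  "birthDate": "1980-04-12"
}
"""


def patient_to_row(resource: dict) -> dict:
    """Hypothetical helper: flatten a FHIR Patient resource into one record."""
    if resource.get("resourceType") != "Patient":
        raise ValueError("expected a FHIR Patient resource")
    names = resource.get("name", [])
    first = names[0] if names else {}  # this sketch keeps only the first listed name
    return {
        "patient_id": resource.get("id"),
        "family_name": first.get("family"),
        "given_names": " ".join(first.get("given", [])),
        "gender": resource.get("gender"),
        "birth_date": resource.get("birthDate"),
    }


row = patient_to_row(json.loads(raw))
print(row)
```

Because every FHIR-conformant system uses the same field names, a mapping like this can be written once and applied to data from different sources.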

Additionally, courses in health informatics often cover analytical techniques and tools that enable the interpretation and visualization of healthcare data. Skills in data management are paramount, as they empower professionals to extract valuable insights from data, thereby driving evidence-based decision-making in healthcare environments.

In summary, pursuing coursework in health informatics equips aspiring professionals with the competencies required to navigate the intersection of healthcare and technology, ultimately fostering advancements in the application of AI solutions in the healthcare sector.

Ethics and Regulations in Healthcare AI

As the integration of artificial intelligence in healthcare continues to grow, it is imperative for professionals entering this domain to have a thorough understanding of the ethical and regulatory landscapes that govern its implementation. Courses that focus on bioethics, data privacy laws, and responsible AI development practices are essential for ensuring a commitment to ethical guidelines and compliance with legal standards.

Bioethics courses typically explore the moral issues arising in medical and biological research, particularly as they pertain to technologies such as AI. Understanding these ethical frameworks is crucial for those designing or implementing AI systems in healthcare, as it provides guidance on issues such as informed consent, the impact of AI on patient autonomy, and the potential for bias in AI algorithms.

Additionally, familiarity with data privacy laws, such as the U.S. Health Insurance Portability and Accountability Act (HIPAA), is fundamental. HIPAA establishes national standards for protecting individuals’ health information, and compliance with these rules is a critical part of operating in the U.S. healthcare sector. Many courses now offer modules dedicated to these legal frameworks, emphasizing the importance of safeguarding patient data while harnessing the capabilities of AI technologies.
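To make data protection more tangible, the sketch below applies a few transformations in the spirit of HIPAA's Safe Harbor de-identification method: removing direct identifiers, keeping only the year of dates, aggregating ages over 89 into a single "90+" category, and truncating ZIP codes to three digits. It is an illustration only, not a compliant de-identification tool. Safe Harbor covers 18 identifier types, and the ZIP rule also depends on the population of the three-digit area, which this sketch does not check. The `deidentify` helper, the field names, and the record are hypothetical.

```python
from datetime import date

# Hypothetical field names for direct identifiers in an invented record schema.
# Safe Harbor lists 18 identifier types; only a few are represented here.
DIRECT_IDENTIFIERS = {"name", "street_address", "phone", "email", "ssn", "mrn"}


def deidentify(record: dict) -> dict:
    """Hypothetical helper: Safe Harbor-style transformations (not a compliance tool)."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}

    # Ages over 89 are aggregated into a single "90+" category.
    over_89 = isinstance(out.get("age"), int) and out["age"] > 89
    if over_89:
        out["age"] = "90+"

    # Keep only the birth year, and drop it when it would reveal an age over 89.
    birth = out.pop("birth_date", None)
    if birth is not None and not over_89:
        out["birth_year"] = birth.year

    # Truncate ZIP to its first three digits (population rule not checked here).
    if "zip" in out:
        out["zip3"] = out.pop("zip")[:3]
    return out


patient = {
    "name": "Jane Doe",  # invented example data
    "mrn": "000123",
    "birth_date": date(1931, 5, 2),
    "age": 93,
    "zip": "02139",
    "diagnosis_code": "E11.9",
}
print(deidentify(patient))
```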

Responsible AI development practices are also vital for maintaining fairness and accountability in healthcare applications. These include bias detection and mitigation, transparency in how AI algorithms reach their outputs, and ongoing evaluation of AI systems to ensure they operate within ethical boundaries. Courses on these topics help cultivate a culture of responsible technology and equip emerging professionals to address ethical dilemmas effectively.
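A simple starting point for bias detection is to compare a model's error rates across patient groups. The sketch below computes the true positive rate (sensitivity) separately for each group and reports the largest gap, a quantity often discussed as the equal-opportunity difference. The `tpr_by_group` helper, the group labels, and all labels and predictions are invented for illustration; a real audit would examine several metrics and account for sample size.

```python
from collections import defaultdict


def tpr_by_group(y_true: list[int], y_pred: list[int], groups: list[str]) -> dict[str, float]:
    """Hypothetical helper: true positive rate computed separately for each group."""
    positives = defaultdict(int)  # actual positives per group
    detected = defaultdict(int)   # actual positives the model flagged
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                detected[group] += 1
    return {g: detected[g] / positives[g] for g in positives}


# Invented labels and predictions for two hypothetical patient groups, A and B.
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0]
groups = ["A"] * 6 + ["B"] * 6

rates = tpr_by_group(y_true, y_pred, groups)
gap = max(rates.values()) - min(rates.values())
print(rates, f"TPR gap = {gap:.2f}")  # → {'A': 0.75, 'B': 0.5} TPR gap = 0.25
```

In this invented example, the model misses half of the true cases in group B but only a quarter in group A, the kind of disparity that ongoing evaluation is meant to surface.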

Interdisciplinary Courses: Combining Healthcare and Technology

Because AI in healthcare sits at the intersection of two disciplines, integrating healthcare and technology knowledge has become increasingly important. Interdisciplinary studies that bridge these domains prepare future professionals to tackle complex challenges effectively. Courses that cover both healthcare systems and technological tools help individuals design and implement AI solutions that enhance patient care and improve health outcomes.

One important area of focus is biomedical engineering, which combines principles of engineering with medical sciences. This field equips students with skills to develop healthcare technologies, including diagnostic devices and treatment methods that leverage AI. Understanding the mechanical and biological aspects of medical devices can lead to innovations that revolutionize patient care.

Similarly, public health courses provide an essential framework for understanding population health dynamics. By exploring health data analytics and epidemiological methods, students can learn to harness AI to identify trends, predict disease outbreaks, and evaluate the effectiveness of health interventions. Public health professionals equipped with technological knowledge can facilitate data-driven decision-making at various levels of healthcare systems.
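As a toy version of outbreak detection, the sketch below flags days whose case count exceeds the mean of the preceding week by more than two standard deviations. The `flag_unusual_days` helper, its parameters, and the daily counts are hypothetical; production surveillance systems use more careful methods that handle seasonality, day-of-week effects, and reporting delays.

```python
import statistics


def flag_unusual_days(counts: list[int], baseline_days: int = 7, k: float = 2.0) -> list[int]:
    """Hypothetical helper: indices of days whose count exceeds mean + k * SD
    of the preceding `baseline_days` days."""
    if baseline_days < 2:
        raise ValueError("baseline needs at least two days to estimate spread")
    flagged = []
    for i in range(baseline_days, len(counts)):
        window = counts[i - baseline_days:i]  # trailing baseline, excluding day i
        mean = statistics.mean(window)
        sd = statistics.stdev(window)
        if counts[i] > mean + k * sd:
            flagged.append(i)
    return flagged


# Invented daily case counts with a spike on day 9 (0-indexed).
daily_cases = [12, 15, 11, 14, 13, 12, 16, 14, 13, 31, 15]
print(flag_unusual_days(daily_cases))  # → [9]
```

One design limitation is visible here: once the spike enters the trailing window it inflates the baseline, which can mask a sustained outbreak on the following days.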

Health policy courses also play a pivotal role in this interdisciplinary approach. As healthcare systems evolve, policymakers must make informed decisions that take into account the impacts of AI. Understanding policy frameworks allows individuals to advocate for ethical AI usage and ensure compliance with regulations while promoting advancements that are beneficial to public health.

Overall, a well-rounded education that emphasizes the intersection of healthcare and technology through interdisciplinary courses provides aspiring professionals with the versatility needed to effectively implement AI in healthcare. Such comprehensive training not only enhances problem-solving capabilities but also fosters innovation within the field.

Practical Experience and Industry Exposure

Practical experience is essential for anyone aspiring to enter the field of artificial intelligence in healthcare. Combining theoretical knowledge with hands-on skills significantly improves a student’s ability to navigate the complexities of this interdisciplinary domain. Internships, research projects, and workshops give students valuable opportunities to apply what they have learned in real-world scenarios.

Internships are particularly beneficial, as they allow students to immerse themselves in the working environment of healthcare institutions or technology companies. During these periods, students can engage with professionals, contribute to ongoing projects, and gain insights into the operational aspects of AI applications in healthcare settings. This experience not only enriches their resumes but also helps in forming crucial industry connections that may facilitate future employment.

Research projects are another avenue through which students can gain practical experience. Collaborations with academic institutions or hospitals can offer students the chance to engage in AI-focused research initiatives. These projects may include developing predictive analytics models for patient care, investigating machine learning algorithms for diagnostics, or exploring the integration of AI in telemedicine. Such initiatives allow students to contribute to significant advancements while honing their skills in data analysis and algorithm development.

Moreover, workshops and seminars provide additional platforms for skill enhancement. These events often feature industry experts discussing the latest trends and technologies in AI and healthcare. Participating in these workshops allows students to keep abreast of current developments, learn about tools and technologies used in the field, and develop a network of contacts that can be advantageous for their career paths.

Conclusion: Charting Your Path in AI for Healthcare

As the field of artificial intelligence continues to expand within healthcare, it has become increasingly vital for aspiring professionals to equip themselves with the right knowledge and skills. The essential courses discussed throughout this blog post serve as a foundational framework for those looking to enter this dynamic sector. By focusing on areas such as data analytics, machine learning, and healthcare ethics, individuals can develop an interdisciplinary skill set that prepares them for the multifaceted challenges of AI in healthcare.

To pursue a career in AI within the healthcare industry, candidates should aim for a well-rounded education that emphasizes not only technical proficiency but also an understanding of healthcare systems and patient-centered care. Combining traditional coursework with practical experience through internships, projects, or collaborative research creates a rich learning environment. This balance allows individuals to bridge the gap between technology and healthcare and meet the needs of diverse stakeholders.

For those interested in enhancing their career prospects, keeping pace with emerging technologies in AI and their applications in healthcare is crucial. Engaging in continuous learning—whether through online courses, workshops, or professional conferences—can bolster one’s expertise and adaptability in a rapidly evolving field. Additionally, connecting with professionals and mentors already working at the intersection of AI and healthcare can provide valuable insights and networking opportunities.

In summary, the journey towards a fulfilling career in AI for healthcare demands a strategic approach to education and skill acquisition. With a robust foundation built on the essential courses and a commitment to lifelong learning, individuals can effectively position themselves to contribute meaningfully to the future of healthcare technology.